Overview

HunyuanImage 3.0, introduced by Tencent in Sep 2025, is a groundbreaking native multimodal model that unifies multimodal understanding and generation within an autoregressive framework.

As the largest open-source image generation Mixture of Experts (MoE) model to date, it boasts 80 billion total parameters, with 13 billion activated per token, setting a new standard for performance and capability in the field of AI image generation.

Unlike prevalent Diffusion Transformer based architectures, HunyuanImage 3.0 employs a unified autoregressive framework. This innovative design enables a more direct and integrated modeling of text and image modalities, leading to surprisingly effective and contextually rich image generation. The model has been trained on a massive dataset of 5 billion image-text pairs, video frames, and interleaved image-text data, allowing it to achieve performance comparable to or surpassing leading closed-source models.

The release of HunyuanImage 3.0 is a milestone in the democratization of advanced AI image generation technology, providing a powerful, open-source tool for creators, developers, and researchers worldwide.

Key Strengths and Core Capabilities

HunyuanImage 3.0 introduces a range of powerful features that set it apart from other text-to-image models:

Unified Multimodal Architecture

The model's autoregressive framework allows for a more direct and integrated modeling of text and image modalities, resulting in more contextually rich and coherent image generation. This architecture moves beyond traditional DiT-based approaches to provide superior understanding of complex relationships between text descriptions and visual elements.

Superior Image Generation Performance

Through rigorous dataset curation and advanced reinforcement learning post-training, the model achieves an optimal balance between semantic accuracy and visual excellence. It demonstrates exceptional prompt adherence while delivering photorealistic imagery with stunning aesthetic quality and fine-grained details.

Intelligent World-Knowledge Reasoning

The unified multimodal architecture endows HunyuanImage 3.0 with powerful reasoning capabilities. It leverages its extensive world knowledge to intelligently interpret user intent, automatically elaborating on sparse prompts with contextually appropriate details to produce superior, more complete visual outputs.

Ultra-Long Text Understanding

The model supports thousand-character level complex semantic understanding, a rare feature among open-source models. This allows for the creation of highly detailed and specific images from complex, nuanced prompts that would overwhelm other text-to-image systems.

Precise Text Rendering

HunyuanImage 3.0 excels at generating legible and aesthetically pleasing text within images, making it ideal for creating posters, infographics, and designs that require accurate text representation. The model can handle multiple languages and various font styles with remarkable precision.

Bilingual Support

The model offers robust support for both English and Chinese, enabling high-quality image generation from prompts in either language. This bilingual capability extends to text rendering within images, supporting mixed-language compositions.

Practical Examples

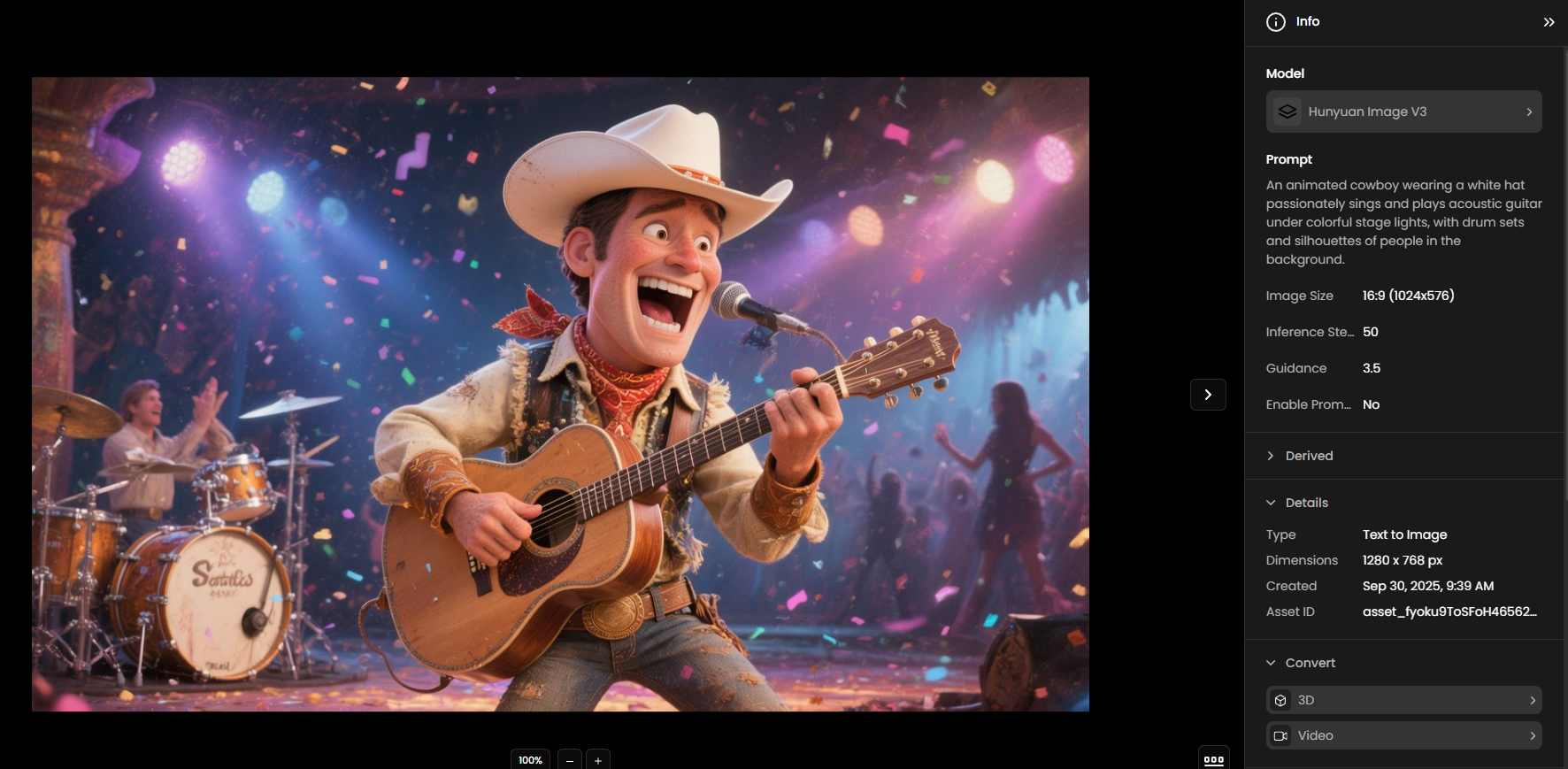

1. The Country Star Performance

Prompt:

An animated cowboy wearing a white hat passionately sings and plays acoustic guitar under colorful stage lights, with drum sets and silhouettes of people in the background.

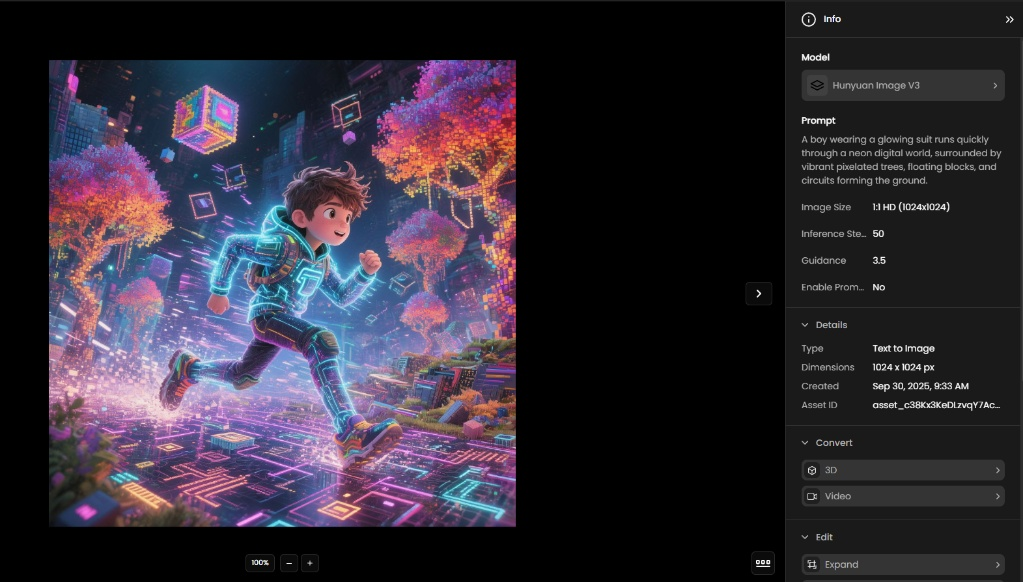

2. Sprinting Through Cyberspace

Prompt:

A boy wearing a glowing suit runs quickly through a neon digital world, surrounded by vibrant pixelated trees, floating blocks, and circuits forming the ground.

✅ This set demonstrates:

Unified Multimodal Architecture → blending text + visuals seamlessly (esp. #12, #14).

Superior Image Generation Performance → realism & fidelity (#1–5).

World-Knowledge Reasoning → historically/contextually accurate (#11, #13).

Ultra-Long Text Understanding → complex prompts with multiple conditions (#15).

Precise Text Rendering → multilingual + infographic style (#12, #14).

References

[1] Tencent-Hunyuan. (2025). HunyuanImage-3.0: A Powerful Native Multimodal Model for Image Generation. GitHub. Retrieved from https://github.com/Tencent-Hunyuan/HunyuanImage-3.0

[2] Milo, C. (2025). Tencent Hunyuan Image 3.0 Complete Guide - In-Depth Analysis of the World's Largest Open-Source Text-to-Image Model. DEV Community. Retrieved from https://dev.to/czmilo/tencent-hunyuan-image-30-complete-guide-in-depth-analysis-of-the-worlds-largest-open-source-57k3

Was this helpful?