Video generation is a powerful AI capability that transforms static images, text descriptions, or both into dynamic content. With video generation now available in Scenario, understanding the fundamentals will help you integrate this feature effectively into your creative workflow.

What is AI Video Generation?

AI video generation uses advanced machine learning models to create short video clips based on either text prompts (text-to-video) or static images (image-to-video). Different models have been trained on different datasets of video content, allowing them to understand the relationship between visual elements and natural movement patterns.

Video generation capabilities include two primary approaches:

Text-to-Video (T2V)

Text-to-video generation creates video content based solely on textual descriptions. You provide a detailed prompt describing what you want to see, and the AI generates a video matching that description.

Requires no visual input, only text descriptions

Offers flexibility, but may not precisely match your intended style or exact visual concepts

Ideal for visualizing entirely new concepts quickly

Typically requires more detailed prompts for precise results

Image-to-Video (I2V)

Image-to-video generation animates existing static images, bringing them to life with natural movement while maintaining the visual style and composition of the original image.

Uses your existing image as a starting point

Maintains higher visual consistency with your original artwork and provides more predictable results than text-only generation

Can be guided with additional text prompts for specific motion

Example use case: A marketing professional has one product photo of a perfume bottle and wants to create an elegant video for social media, so they animate the image with subtle reflections and a gentle rotating movement.

Some models accept more than one input image or frame, most often so you can set the final frame. You can use the same frame for the start and end positions, or use “Scene Elements” to combine different elements. Some models also let you manually select (mask) specific areas to better guide the movement.

Key Considerations for Video Generation

When working with AI videos, keep these important factors in mind:

Duration & Resolution Tradeoffs

Most video generation models produce short clips (typically 5-12 seconds, sometimes longer) at resolutions ranging from 480p to 1080p. These limitations exist due to the computational complexity of generating consistent video content. Duration and resolution capabilities vary by model, with some optimized for higher quality at the expense of shorter duration, and others offering longer clips at lower resolutions.
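To make the tradeoff concrete, here is a rough back-of-the-envelope sketch of how many pixels a model has to produce for a clip. The 24 fps frame rate and the 16:9 frame sizes are assumptions chosen for illustration, not values taken from any specific model in Scenario, and real generation cost does not scale purely with pixel count.

```python
# Illustrative arithmetic only: the frame rate and 16:9 frame sizes below
# are assumptions, not settings of any specific Scenario model.
RESOLUTIONS = {
    "480p": (854, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def total_pixels(resolution: str, seconds: float, fps: int = 24) -> int:
    """Return the number of pixels generated for one clip."""
    width, height = RESOLUTIONS[resolution]
    frames = round(seconds * fps)
    return width * height * frames


short_hd = total_pixels("1080p", 5)   # short clip, high resolution
long_sd = total_pixels("480p", 12)    # longer clip, low resolution

print(f"5 s at 1080p: {short_hd:,} pixels")
print(f"12 s at 480p: {long_sd:,} pixels")
print(f"ratio: {short_hd / long_sd:.2f}x")
```

Under these assumptions, a 5-second clip at 1080p still involves roughly twice as many pixels as a 12-second clip at 480p, which is one way to see why higher resolution usually comes with shorter duration.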

Style Consistency

Video generation models can maintain the visual style of reference images or create content that matches style descriptions in your prompts. For the most consistent results:

Be explicit about the desired visual style in your prompts

When using image-to-video, ensure your reference image(s) have a clear, defined style

Consider how movement might affect the perception of style elements

Scenario lets you generate images in a specific style using custom models. Edit with Prompt can then generate other scenes and angles from an existing image. In the example below, the generated image of the metal box was used with an instruction to produce the final frame, which shows the box open.

Using these two images, a detailed prompt, and the Pixverse v4.5 model, this video was generated:

Motion Control

Controlling the type and quality of motion is crucial for achieving your creative vision:

Specify camera movements in your prompts (e.g., "slow pan," "zoom in," "tracking shot")

Describe the desired motion of key elements (e.g., "hair gently blowing in the breeze")

Consider the physics of your scene (e.g., how fabric, liquid, or light might naturally move)

Here is the prompt used to generate the metal box video shown above:

A sleek futuristic crate sits closed on a neutral background. Soft mechanical hums begin to emit from it as glowing blue lines across its surface slowly brighten, pulsing with rising energy. The crate vibrates slightly, and internal mechanisms shift with subtle clicks and whirs. A faint glow begins to escape from the seams as the energy builds. Tension rises, the lights pulse faster, a high-pitched charge-up sound intensifies. Then, in a sudden and abrupt burst, the crate springs open forcefully. A blinding golden light shoots upward from within, illuminating the lid and surroundings. The light radiates with heatwaves and glow, suggesting something powerful and valuable is inside. Dust particles float in the energized air. The energy buildup and sudden release should feel dramatic and satisfying.

Prompt Crafting

Effective prompts are essential for getting the results you want:

Be specific about subjects, actions, and environment

Include details about lighting, atmosphere, and mood

Specify camera angles and movements

Reference visual styles or cinematography techniques when relevant

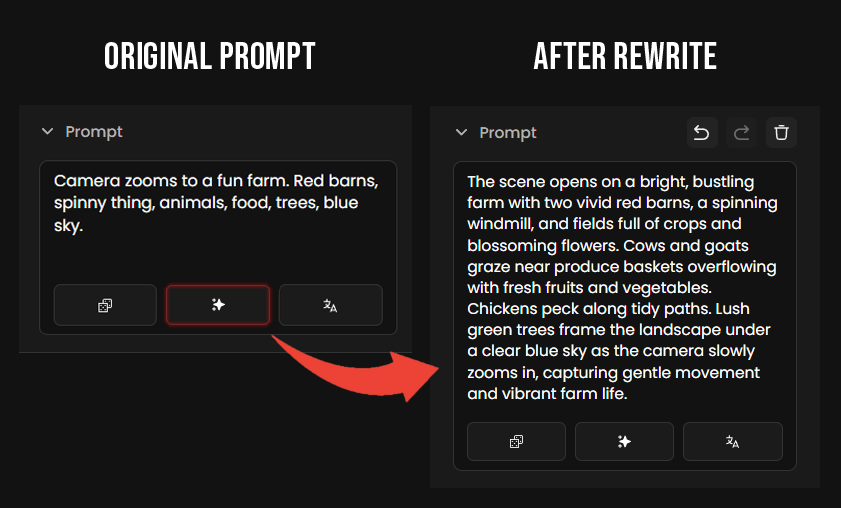

We highly recommend using Scenario’s “Prompt Spark” prompt assistant tools when generating videos, especially while you’re learning how to prompt and work with these models.

Prompt Spark helps you build well-structured prompts that cover the essential details (subject, movement, style, and camera behavior), which makes successful video results more likely. For more guidance, see “Craft Effective Prompts”.
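If you prefer to draft prompts by hand, the checklist above can be sketched as a small helper that assembles the pieces into one prompt. This is only an illustration of the structure: the `VideoPrompt` class and its field names are hypothetical, and it is not how Prompt Spark works internally.

```python
from dataclasses import dataclass


@dataclass
class VideoPrompt:
    """Hypothetical helper that mirrors the prompt checklist above."""
    subject: str        # who or what is in the scene, and where
    motion: str         # how the key elements move
    camera: str         # camera angle and movement
    style: str = ""     # optional visual style or cinematography reference
    lighting: str = ""  # optional lighting, atmosphere, and mood

    def build(self) -> str:
        """Join the non-empty parts into one prompt, one sentence per part."""
        parts = [self.subject, self.motion, self.camera, self.style, self.lighting]
        sentences = [p.strip().rstrip(".") for p in parts if p.strip()]
        return ". ".join(sentences) + "."


prompt = VideoPrompt(
    subject="A perfume bottle on a marble surface",
    motion="Light reflections drift slowly across the glass",
    camera="Slow orbit around the bottle",
    style="Elegant product cinematography",
    lighting="Soft studio lighting",
)
print(prompt.build())
```

Keeping each element in its own field makes it easy to see what a prompt is missing, and to change one element (for example, the camera movement) between iterations while leaving the rest untouched.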

Getting Started with Video Generation in Scenario

Scenario makes it easy to incorporate video generation into your creative workflow. You can launch the Video Generation tools in several ways:

From an individual image: Open the image you want to use, click the three-dot icon in the top-right corner, and select "Send to / Video". This opens the tool with the image already loaded into the interface.

From any gallery view: Whether browsing generated images or exploring your library, hover over any image thumbnail and click the three-dot icon in the top-right corner to find the "Send to / Video" option.

Via the main navigation panel: Select Video in the panel to open the tool from scratch, with no image pre-loaded. You can also find it on the "All Tools" page.

Direct link: Access the tool directly via this URL: https://app.scenario.com/videos

Once you’re in the video generation interface:

Select a Model: By default, Seedance will be loaded, but you can choose from dozens of video generation models based on your specific needs in the top-left section of the interface (see "How to Choose the right video Model" guide for detailed comparisons).

Prepare Your Input: Craft a detailed prompt or select a high-quality reference image. Don’t forget to leverage the prompt assistance tools available (like Prompt Spark).

Generate, Review, and Iterate: Generate the video, evaluate the results, and refine your prompt or input image as needed (see “Troubleshooting AI Video Generations”).

Common Applications

Video generation opens up numerous creative possibilities across different fields. Below are example workflows, with potential models to test for each:

For Game Artists

Animate character concepts to visualize movement and personality (Kling 2.1 or Veo 3)

Create dynamic environment previews from concept art (Seedance Pro)

Generate promotional content for game marketing (Kling 2.1 Pro or Pixverse V5)

Visualize special effects and abilities before implementation (Kling 2.1 Pro)

For Designers

Bring illustrations and graphic designs to life (Wan 2.2 A14B)

Create animated versions of logos and brand elements (Kling 2.1)

Develop dynamic mockups for client presentations (Seedance Pro)

For Marketing Professionals

Transform product photography into engaging video content (Veo 3)

Create eye-catching social media assets (Kling 2.0 or Pixverse v4.5)

Develop quick concept videos for campaign pitches (Seedance Lite)

For Content Creators

Add motion to static artwork for portfolio enhancement (Wan 2.2 A14B)

Create short animated sequences for larger projects (Kling 2.1 Pro)

Generate background elements for video productions (Seedance Lite)

Editing Your Own Videos in Scenario

In addition to generating videos from text or images, Scenario also allows you to edit and transform your existing clips.

You can restyle visuals, reframe shots, replace subjects, or expand scenes, all from a single prompt.

This makes it easy to combine generation and editing within the same workflow:

Generate your video using any of Scenario’s models.

Then use editing models like Runway Aleph, Luma Reframe, or Wan 2.2 to refine, restyle, or adapt it for different formats and platforms.

Conclusion

Video generation represents a new frontier in AI-powered creativity, allowing you to bring static concepts to life with unprecedented ease. Now that you understand the fundamentals, you're ready to explore Scenario's video generation capabilities and incorporate this powerful tool into your creative workflow.

In the next articles, we'll dive deeper into model selection, prompt engineering strategies, and troubleshooting for getting the most out of video generation.
