Add a custom prompt to your model to better guide its output for users

Prompt embedding is a technique in generative AI that adds extra context or instructions directly to the user's input prompt. This helps the AI model understand the user's intent and produce more accurate and relevant results.

There are two main types of prompt embedding:

Positive Prompt Embedding: Adds helpful context or instructions that guide the AI towards the desired output.

Negative Prompt Embedding (only for Stable Diffusion models): Specifies what the AI should avoid or exclude in its response.

Prompt embedding offers three main benefits:

Enhanced Accuracy: Additional context lets the AI generate more precise and contextually appropriate outputs.

Consistency: Ensures the AI adheres to specific guidelines or standards consistently across all outputs.

Customization: Tailors the AI’s responses to better fit specific use cases or user preferences.

To configure prompt embedding on our platform, follow these steps:

Navigate to Your Model Page

Go to the model page where you want to configure prompt embedding.

Access the Details Tab

Click on the Details tab to find the configuration settings.

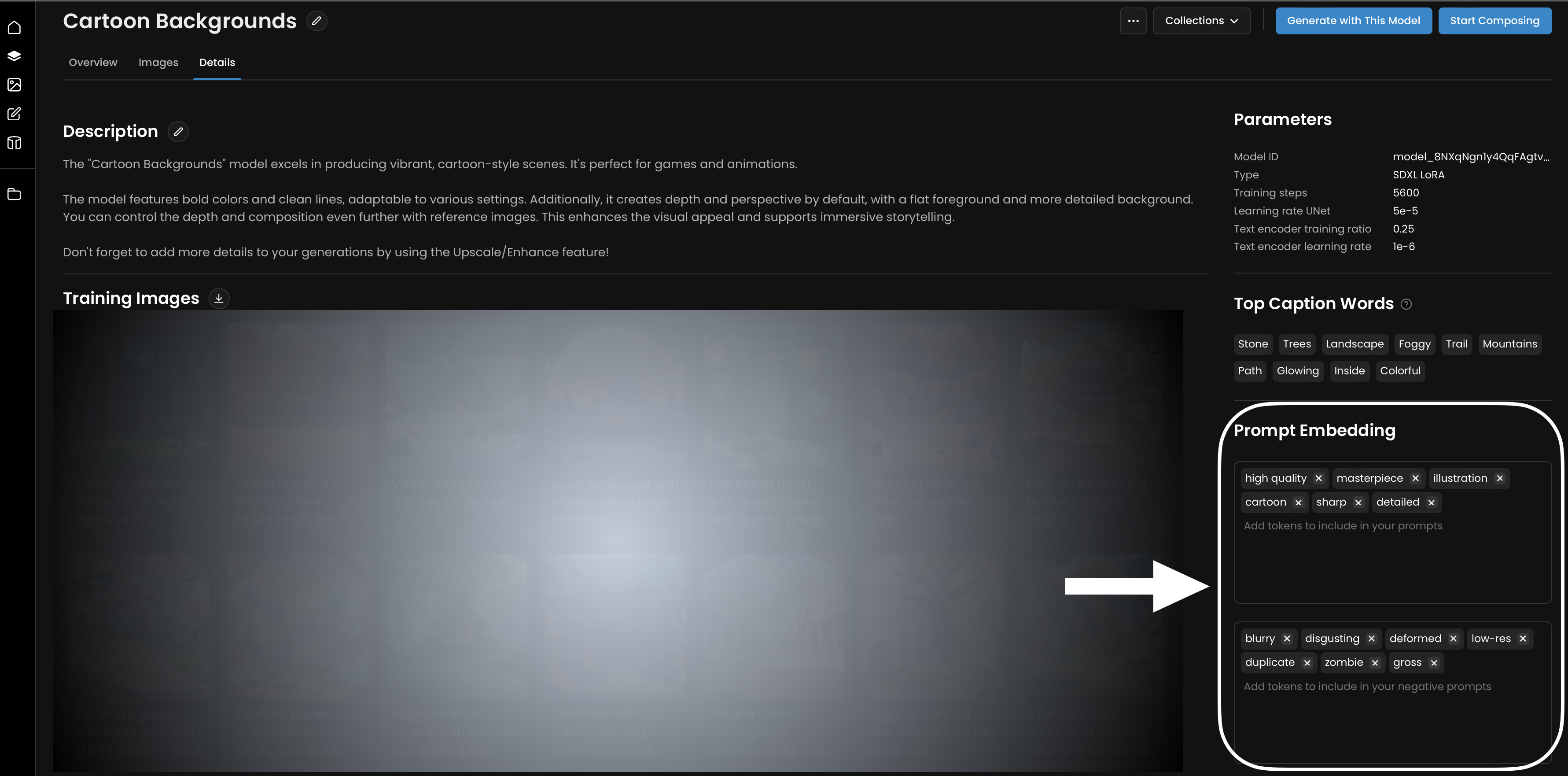

Configure Prompt Embedding

You will see options to configure both prompt embedding and negative prompt embedding.

Prompt Embedding

Definition: Embeds additional context or instructions into the user prompt.

Usage: Enter the contextual information or guidelines that you want included in every prompt. Typical entries are keywords describing good quality or a specific style you want your model to apply.

Negative Prompt Embedding

Definition: Specifies elements to avoid or exclude in the AI's output.

Usage: Enter the constraints or exclusions that you want the AI to adhere to. Typical entries are keywords describing bad quality or a specific style you want the model to avoid.

The prompt embeddings you configure do not appear in the prompt during generation. Instead, they are added to the user's input prompt behind the scenes, so the AI model always receives the full context without the user needing to enter it manually every time.
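To make the behind-the-scenes merge concrete, here is a minimal Python sketch. It is an illustration only: the platform's actual merging logic is not documented here, so the `ModelPromptConfig` class, the `build_final_prompts` function, the comma-joined format, and the example keywords are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ModelPromptConfig:
    """Hypothetical container for the two fields set on the Details tab."""
    prompt_embedding: str = ""
    negative_prompt_embedding: str = ""


def build_final_prompts(
    user_prompt: str, user_negative: str, config: ModelPromptConfig
) -> Tuple[str, str]:
    """Merge the user's input with the owner's embeddings.

    Assumption: user text comes first and parts are comma-joined.
    The real platform may order or join them differently.
    """
    def join(*parts: str) -> str:
        # Skip empty parts so an unset embedding leaves the prompt unchanged.
        return ", ".join(p.strip() for p in parts if p.strip())

    return (
        join(user_prompt, config.prompt_embedding),
        join(user_negative, config.negative_prompt_embedding),
    )


# Example values are invented for illustration.
config = ModelPromptConfig(
    prompt_embedding="highly detailed, sharp focus",
    negative_prompt_embedding="blurry, low quality",
)
prompt, negative = build_final_prompts("a lighthouse at dusk", "", config)
print(prompt)    # a lighthouse at dusk, highly detailed, sharp focus
print(negative)  # blurry, low quality
```

In this sketch the user only types "a lighthouse at dusk"; the quality keywords and exclusions are attached automatically, which mirrors the behavior described above.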

Prompt embedding can only be configured by the owner of the model. This ensures that only authorized users can modify the model's fundamental behavior.

Prompt embedding is a powerful tool to enhance the performance and customization of generative AI models. By embedding additional context or constraints directly into the prompts, you can achieve more accurate, consistent, and tailored outputs. On our platform, configuring prompt embedding is straightforward and can significantly improve your AI model's effectiveness.

Quentin