Understand the key features and settings of our training interface so you can optimize model creation with ease.

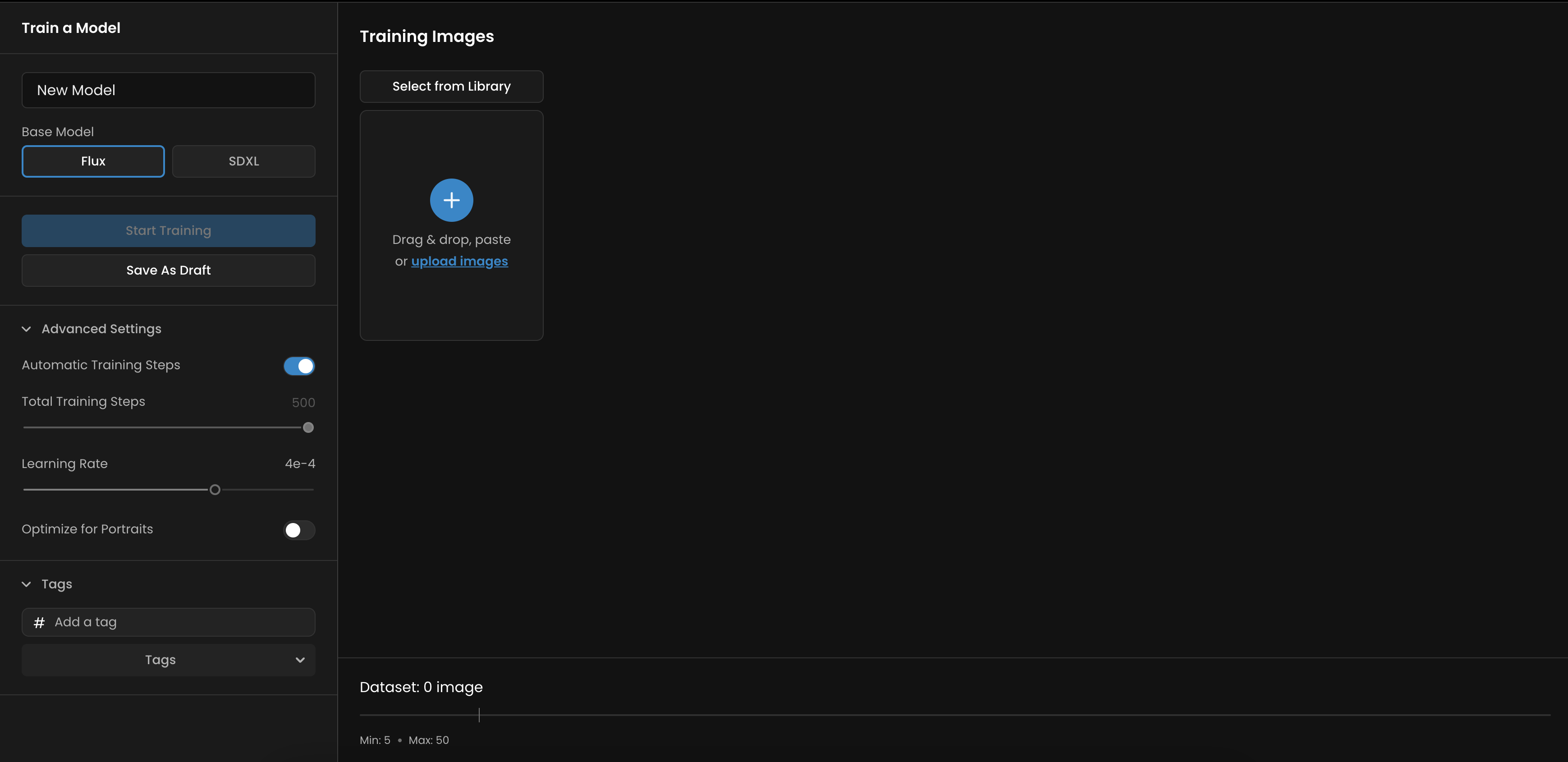

To start training a model, navigate to the left menu and click on Model > New, then select Training. This will bring you to the main training interface, where you can configure your model for optimal results.

Naming Your Model

The first field lets you give your model a name. Choose a name that clearly represents the purpose or subject of the model.

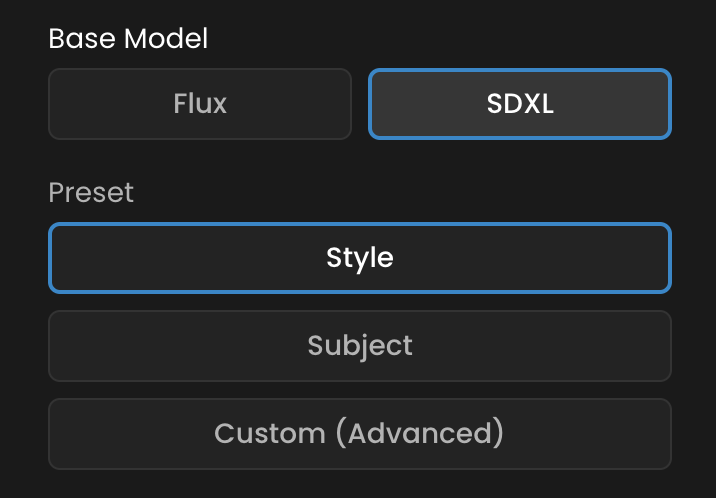

Selecting the Training Type

You must choose between Flux and SDXL as your training type.

SDXL: When selecting SDXL, you can access advanced presets optimized for different training goals:

Style: For training a specific artistic style. - More info on training a Style here

Subject: For training a particular character or object. - More info on training a Character here

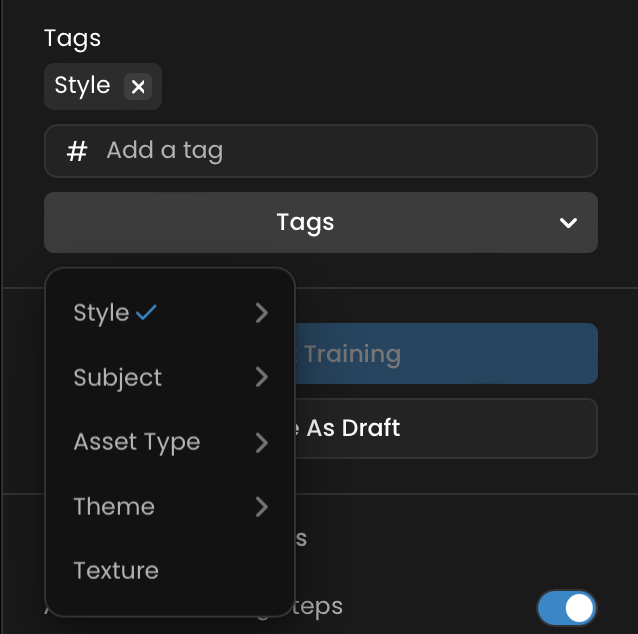

Tagging Your Model

Tags help organize and categorize your model. You can either select tags from a predefined list or create custom tags by typing a word and pressing Enter. Tags then make it easier to find your model using the filters in the Model modal. You can also tag your model later. More info in our Tag article.

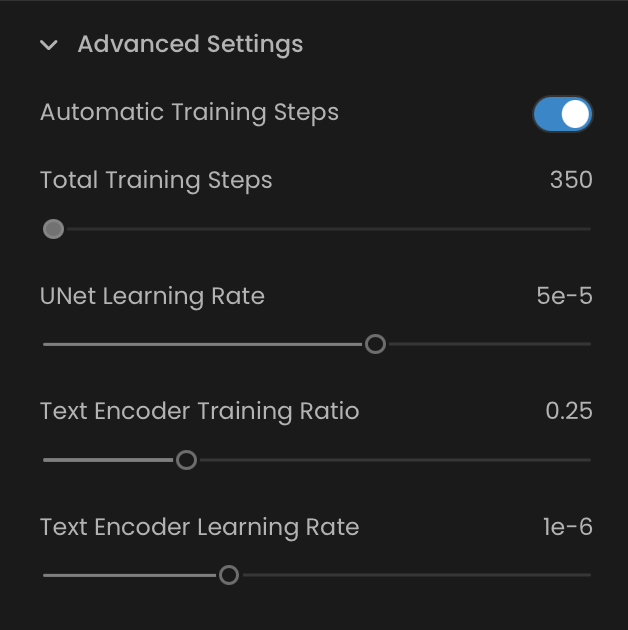

Advanced Settings

For users looking to fine-tune their model training, the advanced settings offer several adjustable parameters:

Number of Training Steps: This defines the total number of training iterations (steps) the AI performs while learning from your dataset.

For SDXL, the default recommendation is 350 steps per image. For larger datasets (over 30 images), consider reducing the steps to 50-100 per image.

For Flux, we recommend 500 training steps plus 50 per training image (for example, 1,000 steps for a 10-image dataset).

Note: The number of training steps is crucial as it determines how thoroughly the model learns from your dataset. While more steps can lead to a more accurate model, they can also increase the risk of overfitting, where the model starts memorizing the training data instead of generalizing it.
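The step recommendations above can be sketched as a small calculation. This is only an illustration of the arithmetic, not how the platform computes anything: the function name `recommended_steps` and the parameter `sdxl_large_per_image` are hypothetical, and the default of 75 is just an assumed midpoint of the 50-100 range suggested for datasets of more than 30 images.

```python
def recommended_steps(training_type: str, num_images: int,
                      sdxl_large_per_image: int = 75) -> int:
    """Rule-of-thumb total training steps (illustrative helper, not a platform API)."""
    if num_images <= 0:
        raise ValueError("num_images must be positive")
    if not 50 <= sdxl_large_per_image <= 100:
        raise ValueError("sdxl_large_per_image should stay in the 50-100 range")

    kind = training_type.strip().lower()
    if kind == "flux":
        # 500 base steps plus 50 per training image.
        return 500 + 50 * num_images
    if kind == "sdxl":
        # 350 steps per image by default; fewer per image once the
        # dataset grows beyond 30 images (assumed value, see above).
        per_image = 350 if num_images <= 30 else sdxl_large_per_image
        return per_image * num_images
    raise ValueError(f"unknown training type: {training_type!r}")


print(recommended_steps("Flux", 10))  # 500 + 50 * 10 = 1000
print(recommended_steps("SDXL", 10))  # 350 * 10 = 3500
print(recommended_steps("SDXL", 40))  # 75 * 40 = 3000
```

Treat the result as a starting value to type into the Number of Training Steps field, then adjust it based on how the trained model behaves.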

UNet Learning Rate: This parameter controls the speed at which the AI learns from the images in your dataset. A higher learning rate speeds up training but can make the model more prone to overfitting by not fully capturing the underlying patterns in the data. A lower learning rate allows for more detailed learning but can slow down the training process.

Note: Typically, you will want a lower learning rate for a large dataset with many training steps, and a higher learning rate for a smaller dataset with fewer training steps. Adjust these settings incrementally to find the right balance for your specific dataset.

Text Encoder Training Ratio: This represents the proportion of total training steps dedicated to the text encoder, which is responsible for interpreting captions. A higher ratio increases the focus on captions, enhancing the model’s ability to understand and apply text descriptions accurately.

Note: The text encoder's influence is particularly important when training models with specific, detailed subjects. For style training, where the AI already understands many concepts, a lower ratio is typically sufficient. For subject training, increasing the ratio can help maintain consistency in how the model interprets specific details.
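To make the ratio concrete, here is a minimal sketch of how a proportion translates into a step count. It assumes the ratio is expressed as a fraction between 0 and 1; the function name `text_encoder_steps` is hypothetical, and how the platform actually rounds or schedules these steps is not specified here.

```python
def text_encoder_steps(total_steps: int, ratio: float) -> int:
    """Number of steps that would go to the text encoder for a given ratio.

    Illustrative only: assumes `ratio` is a fraction of the total steps (0 to 1).
    """
    if total_steps < 0:
        raise ValueError("total_steps must not be negative")
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    return round(total_steps * ratio)


# With 1,000 total steps, a ratio of 0.25 corresponds to 250 text encoder steps.
print(text_encoder_steps(1000, 0.25))
```

Under this assumption, raising the ratio for subject training simply means a larger share of the same step budget is spent on learning from captions.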

Text Encoder Learning Rate: Similar to the UNet learning rate, this parameter adjusts how quickly the text encoder learns from the captions.

Balancing Training Steps and Learning Rate:

Training Steps: These refer to the number of iterations the model undergoes during training. While more steps can lead to a more accurate model, excessive steps can cause overfitting. The goal is to find a 'sweet spot' where the model is well-trained without overfitting.

Learning Rate: This dictates the speed of the training process. A higher learning rate can result in faster training but may lead to overfitting, as the model might not capture the underlying patterns in the data. Conversely, a lower learning rate promotes more thorough learning but at a slower pace.

General Strategy: Start with a lower learning rate and increase the number of training steps incrementally. Observe how your model behaves with these adjustments and fine-tune as necessary.

UNet vs. Text Encoder:

UNet: This component primarily affects how the images in your dataset influence the model. Adjusting the UNet learning rate impacts how strongly the visual aspects are integrated into the model.

Text Encoder: This component influences how well the captions are understood and associated with the images. The Text Encoder Training Ratio and Learning Rate can be adjusted based on whether you’re focusing on style or subject training.

Consistency and Testing:

Keep your approach consistent across the dataset, for example by captioning every image in the same way. This helps the AI model learn and apply concepts more effectively.

Test different combinations of training steps and learning rates to determine what works best for your specific dataset and goals. Keeping training steps above 500 is generally recommended, but the optimal number depends on your specific project.
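One way to organize such tests is to write down the combinations before you start, so each training run changes only one thing at a time. The sketch below is a planning aid under stated assumptions: the candidate values are placeholders, not recommended settings, and the names `build_test_grid`, `step_candidates`, and `learning_rate_candidates` are hypothetical. It only keeps step counts above 500, following the guidance above.

```python
from itertools import product

# Placeholder candidates for illustration only; substitute your own values.
step_candidates = [250, 750, 1000, 1500]
learning_rate_candidates = [1e-4, 1e-5, 5e-5]


def build_test_grid(step_options, lr_options, min_steps=500):
    """List every (steps, learning rate) pair with steps above `min_steps`."""
    kept_steps = sorted(s for s in step_options if s > min_steps)
    # Lower learning rates first, matching the "start low" strategy.
    ordered_rates = sorted(lr_options)
    return [
        {"training_steps": steps, "unet_learning_rate": rate}
        for steps, rate in product(kept_steps, ordered_rates)
    ]


grid = build_test_grid(step_candidates, learning_rate_candidates)
for run in grid:
    print(run)
```

Each entry describes one training run to configure by hand in the advanced settings; comparing the resulting models side by side shows which combination suits your dataset.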

The training interface provides a flexible and powerful environment to create highly customized models. By understanding and carefully adjusting the parameters, you can train models that are finely tuned to your specific needs. Start with the presets and experiment with advanced settings as you gain confidence and understanding in model training.

Quentin