Discover essential strategies and methods for gathering and curating high-quality training data to create robust and versatile AI models for character or style generation.

If you don't have a dataset readily available, there are several methods to gather and create training data for your character or style model:

Create Your Own: Hand-draw or digitally create artwork using tools like Blender, Photoshop, or other graphic design software. Ensure consistency in key features, such as character design or style elements, while varying poses, expressions, and backgrounds. This method gives you full control over the data and ensures it aligns perfectly with your vision.

Source from Existing Content: Explore royalty-free image websites or stock photography platforms to find suitable images. Select those that align with your character’s features or desired style while ensuring there’s enough diversity to make the training effective. This approach allows you to build a dataset quickly using high-quality, legally compliant images.

Generate Using Character or Style Reference: Utilize Scenario's built-in Character or Style Reference modes to generate a targeted subset of training images. This feature is designed to help you create consistent, high-quality training datasets tailored to specific characters or styles. For more detailed guidance, refer to our dedicated articles on Training a Character LoRA and Training a Style LoRA. Once generated, refine and upscale these images to ensure they are of the highest quality. Additional tips can be found in our article on Enhancing Images.

Remember: Curation is key. Carefully select and refine your training data to ensure consistency in core features while providing enough variety to build a versatile and robust model.

Dataset Size and Scope: Start with a small dataset of 5-15 images, potentially expanding to 30 or more as needed. The dataset should be large enough to provide meaningful patterns for the model to learn from, but not so large that it becomes difficult to manage.

Consistency vs. Variety: Maintain consistency in the aspects you want the model to focus on, such as specific subjects or stylistic elements. However, introduce variety in other areas to prevent the model from overfitting or memorizing irrelevant details.

Image Quality: High-resolution, square images work best for training. Use at least 1024x1024 dimensions for Flux and SDXL models. You can crop and resize images directly in the Scenario web app to meet these specifications.

Testing Different Datasets: Don’t hesitate to experiment with various datasets to determine what works best for your specific needs. Testing can reveal valuable insights and help you refine your approach.

Avoid Overfitting: Avoid using too many similar images, as this can cause the model to become overly specialized and less adaptable. Strive for a balance between consistency and diversity.

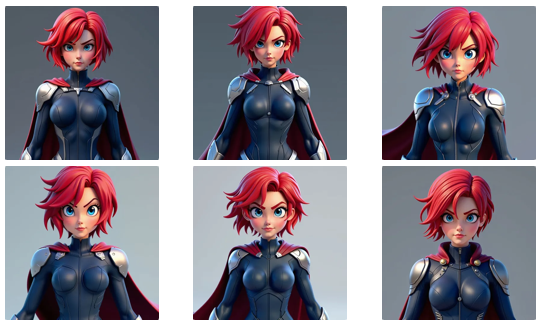

Diverse Imagery: Include images that showcase your character or object in various poses, lighting conditions, and angles. Incorporate different facial expressions, body positions, and full-body shots to enhance the model’s flexibility and accuracy.

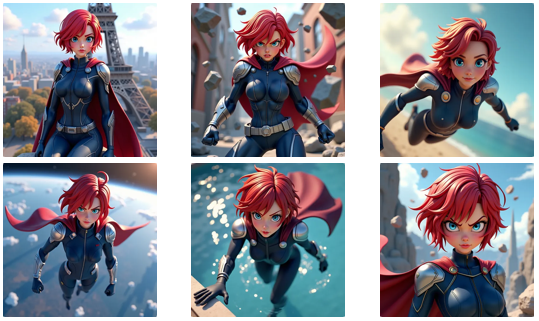

Contextual Variety: Place your character or object in a variety of contexts and settings. This helps the model understand and generate the character or object in different scenarios, making it more versatile.

Mixing Image subjects can lead to poor results:

Adding Backgrounds: To create a realistic model, include images with backgrounds. This allows the model to learn how the subject interacts with its environment, enabling it to generate more contextually rich images.

To generate images of a subject from multiple perspectives, ensure your dataset includes examples showing the subject from various angles.

Bad dataset:

Good dataset:

Group of Subject: If you want the model to generate images with your subject in a group, include multiple examples of group scenes in the dataset.

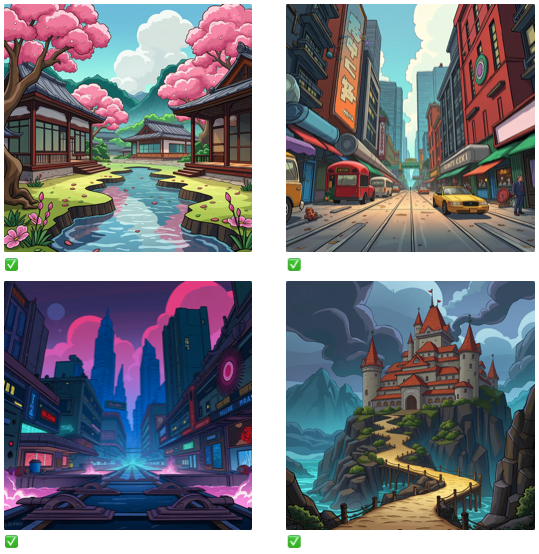

Consistent Styling: Ensure that your dataset uniformly represents the style you’re training for. For example, if you’re focusing on an anime style, use consistent tagging and descriptions related to that genre.

Focused Imagery: Only include images that match the desired style. If you’re training a model on your unique art style, limit the dataset to images created in that style to maintain coherence.

Image Cleaning: Remove unwanted elements, such as stray objects or signatures, from your images to keep the dataset clean and focused on the style you want to train.

You should share the same artistic style in your dataset:

Consistent Visual Style: Ensure the visual style remains consistent throughout. For instance, do not combine sketched portraits and digitally painted portraits in the same dataset. Maintaining a uniform style enables the model to learn and reproduce that particular aesthetic more effectively, rather than having to reconcile contrasting visual approaches. Preserving stylistic cohesion in the dataset leads to more cohesive and true-to-life generated images. Here is a consistent perspective for Isometric Style:

Was this helpful?

Quentin