An effective way to build a strong Flux Kontext training dataset is to “work backwards” from your desired final result.

Instead of trying to create paired datasets from scratch, you can start with your target style, character, or effect and then use AI editing tools to create the "before" images. This “reverse engineering” approach is not only more intuitive but also ensures tight alignment between your input and output pairs.

The BACKWARD Approach: Core Concept

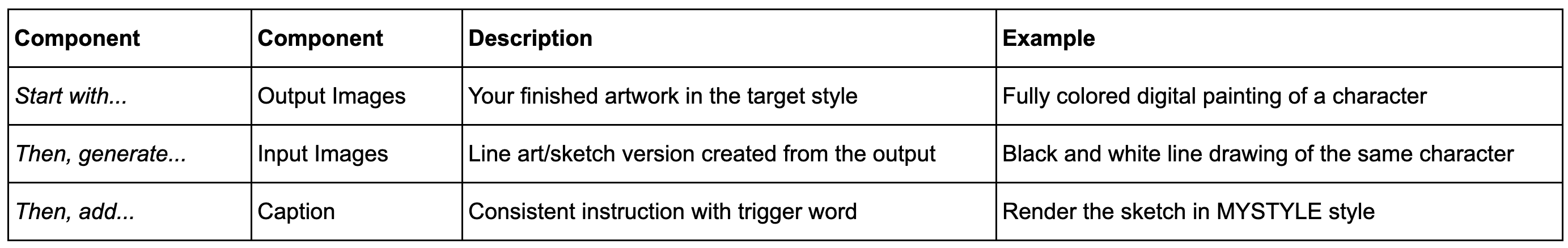

The traditional approach of creating paired datasets from scratch can be slow and inefficient. The BACKWARD method flips this process:

Start with "AFTER" images - These are your existing, final desired results (your style, character, or effect)

Generate the "BEFORE" images - Use AI editing tools like Gemini 2.5, Seedream Edit, or even Flux Kontext itself to reverse-engineer the input

Create consistent captions - Use the same (or similar) instructional format across all pairs

This approach produces reliably aligned training pairs because you're working backwards from a known good result to create the input, rather than trying to predict what the output should look like.
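The three steps above can be sketched as a small Python pipeline. This is a minimal illustration, not Scenario's or any editing tool's API: `make_before` is a hypothetical callable standing in for whichever editing model you use (Gemini 2.5, Seedream Edit, Flux Kontext), and the `TrainingPair` type and the `after/` and `before/` folder layout are assumptions made for the example.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, List


@dataclass
class TrainingPair:
    before: Path  # generated input image (step 2)
    after: Path   # curated target image, the starting point (step 1)
    caption: str  # instruction shared across the dataset (step 3)


def build_backward_pairs(
    after_images: List[Path],
    make_before: Callable[[Path], Path],
    caption: str,
) -> List[TrainingPair]:
    """Work backwards: every curated AFTER image yields its own BEFORE image."""
    pairs = []
    for after in after_images:
        # Hypothetical editing step; in practice this calls your AI editing tool
        # and returns the path of the saved BEFORE image.
        before = make_before(after)
        pairs.append(TrainingPair(before=before, after=after, caption=caption))
    return pairs


def fake_editor(after: Path) -> Path:
    # Stand-in for illustration only: computes where the BEFORE file would be saved.
    return Path("before") / after.name


pairs = build_backward_pairs(
    [Path("after/img_01.png"), Path("after/img_02.png")],
    fake_editor,
    "Render the sketch in MYSTYLE style",
)
for p in pairs:
    print(p.before, "->", p.after, "|", p.caption)
```

Keeping the editing step behind a single callable means you can swap one editing model for another without touching the rest of the dataset logic.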

Practical Example: Building a Consistent Colorizer LoRA

Let's walk through a concrete example of building a dataset for a colorizer that can render sketches in your unique artistic style.

Step 1 - Collect Your "AFTER" Images (Output/Target)

Start with 15-20 images in your desired final style. These could be your own artwork in a specific style, images you've commissioned, or any other consistent artistic style you want to replicate.

Step 2 - Generate Your "BEFORE" Images (Input)

Take each of your "after" images and use an AI editing model such as Gemini 2.5, Seedream Edit, or Flux Kontext to convert it to line art, removing color while preserving structure. The result is a set of clean, consistent, AI-generated sketch versions.

Step 3 - Add Consistent Captions

For every pair, use the same caption format, such as “Render the sketch in MYSTYLE style”, where MYSTYLE is your unique trigger word.
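Generating captions from a single template is an easy way to keep the format identical across every pair. The sketch below writes one JSON line per pair; the field names (`input`, `target`, `caption`) and the JSONL layout are hypothetical, not a format Scenario requires, so adapt them to whatever your training tool expects.

```python
import json
from typing import List, Tuple

TRIGGER = "MYSTYLE"  # placeholder: replace with your unique trigger word
CAPTION_TEMPLATE = "Render the sketch in {trigger} style"


def caption_for(trigger: str) -> str:
    """Build the one caption that every pair in the dataset shares."""
    return CAPTION_TEMPLATE.format(trigger=trigger)


def to_manifest(pairs: List[Tuple[str, str]], trigger: str) -> str:
    """Return one JSON line per (before, after) pair, all with the same caption."""
    caption = caption_for(trigger)
    lines = [
        json.dumps({"input": before, "target": after, "caption": caption})
        for before, after in pairs
    ]
    return "\n".join(lines)


manifest = to_manifest(
    [("before/img_01.png", "after/img_01.png"),
     ("before/img_02.png", "after/img_02.png")],
    TRIGGER,
)
print(manifest)
```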

Once the LoRA is trained, you can use it in Scenario to colorize any new sketch in your unique style: upload the sketch as the reference image and use the same instruction as your prompt, “Render the sketch in MYSTYLE style” (adding details such as colors if needed).

Other Use Cases

Use Case 1: Style Transfer Kontext LoRA

Goal: Transform any photo into a specific artistic style (watercolor, oil painting, anime, etc.)

“BACKWARD” Process:

Collect "AFTER" images: 10-20 images in your target artistic style

Generate "BEFORE" images: Use Gemini 2.5 to convert these to realistic photos or “neutral” versions

Caption (instruction) suggestion: “Transform this image into MYSTYLE style” (if using a trigger word)

Use Case 2: Character Consistency Kontext LoRA

Goal: Turn any character image into your consistent character, across different scenes and poses

“BACKWARD” Process:

Collect "AFTER" images: 10-20 images of your character in various poses, settings, or scenes

Generate "BEFORE" images: Use editing tools (like Gemini 2.5 or Ideogram Character with the mask/inpainting option) to replace your character with a random person or face, while keeping backgrounds, outfits, or settings identical

Caption (instruction) suggestion: “Replace the person with MYCHARACTER” (if using a trigger word)

Use Case 3: Object Addition Kontext LoRA

Goal: Consistently add the same specific object(s) into input images

“BACKWARD” Process:

Collect "AFTER" images: Images with the object you want to add/remove

Generate "BEFORE" images: Use AI editing (Gemini 2.5, Kontext, Seedream, Qwen Edit…) to remove the object from each image (or add it, if you are training a removal LoRA)

Caption (instruction) suggestion: “Add [MYPRODUCT/object] from this image” (if using a trigger word)

Use Case 4: Pose/Expression Modification LoRA

Goal: Change poses or expressions while maintaining character identity

“BACKWARD” Process:

Collect "AFTER" images: Images of people in your target pose(s)/expression(s)

Generate "BEFORE" images: Use AI editing (Gemini 2.5 or Seedance) to change poses/expressions to neutral states

Caption (instruction) suggestion: “Change the pose to [description]” or “Make the person [expression]”
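The caption suggestions for the four use cases can be kept as fill-in templates so every pair in a given dataset uses exactly the same wording. This is a minimal sketch; the template keys and placeholder names (`trigger`, `obj`, `description`, `expression`) are hypothetical labels chosen for the example.

```python
from typing import Dict

# Caption suggestions from the use cases above, as format templates.
CAPTION_TEMPLATES: Dict[str, str] = {
    "style_transfer": "Transform this image into {trigger} style",
    "character": "Replace the person with {trigger}",
    "object_addition": "Add {obj} from this image",
    "pose": "Change the pose to {description}",
    "expression": "Make the person {expression}",
}


def make_caption(use_case: str, **fields: str) -> str:
    """Fill one template; str.format raises KeyError if a placeholder is missing."""
    return CAPTION_TEMPLATES[use_case].format(**fields)


print(make_caption("style_transfer", trigger="MYSTYLE"))
print(make_caption("pose", description="arms crossed"))
```

Failing loudly on a missing placeholder (rather than silently producing "Change the pose to {description}") helps catch inconsistent captions before training.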


Advanced Techniques & Tips

Bootstrapping with Existing Models:

Use Flux Kontext itself to create your "before" images by reversing transformations. For example, if you want to train a "make it vintage" LoRA, start with vintage images and use Flux Kontext to make them modern.

“Multi-Stage BACKWARD”:

For complex transformations, work backwards in multiple steps. For example, to train a "sketch to realistic portrait at a specific angle" LoRA, you can start with realistic portraits at that angle as your “AFTER” images, convert them to another angle, and then convert those to sketches to get your “BEFORE” images.
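Multi-stage BACKWARD is simply function composition: the AFTER image passes through each reverse edit in order until it becomes the BEFORE image. In this sketch, images are represented as strings and `change_angle` / `to_sketch` are hypothetical stand-ins for two AI editing calls, so the output only records which edits were applied.

```python
from functools import reduce
from typing import Callable, List

Image = str  # stand-in type; in practice this would be an image file or object
Edit = Callable[[Image], Image]


def multi_stage_backward(after: Image, stages: List[Edit]) -> Image:
    """Apply reverse edits in order: AFTER -> stage 1 -> stage 2 -> ... -> BEFORE."""
    return reduce(lambda img, edit: edit(img), stages, after)


def change_angle(img: Image) -> Image:
    # Hypothetical stand-in for an AI edit that re-renders the portrait at another angle.
    return f"other_angle({img})"


def to_sketch(img: Image) -> Image:
    # Hypothetical stand-in for an AI edit that converts the image to a sketch.
    return f"sketch({img})"


before = multi_stage_backward("portrait_front.png", [change_angle, to_sketch])
print(before)  # sketch(other_angle(portrait_front.png))
```

Order matters: the stages are listed from the AFTER side toward the BEFORE side, which is the reverse of the transformation the trained LoRA will perform.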

Quality Control

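The BACKWARD method naturally produces high-quality pairs because you're starting with your desired result, which removes much of the guesswork of traditional dataset creation. It is still worth reviewing each generated "before" image, since AI edits can introduce changes beyond the intended transformation.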
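A few mechanical checks can run before the visual review. This sketch assumes a hypothetical convention where each BEFORE file has the same file name (stem) as its AFTER file and each record is an `(after, before, caption)` tuple; it flags missing BEFORE images, mismatched names, captions without the trigger word, and datasets smaller than a chosen minimum. It does not inspect image content.

```python
from pathlib import PurePosixPath
from typing import List, Optional, Tuple

# (after_path, before_path or None if not generated yet, caption): hypothetical record format
Record = Tuple[str, Optional[str], str]


def check_dataset(records: List[Record], trigger: str, min_pairs: int = 10) -> List[str]:
    """Return human-readable problems; an empty list means no issues were found."""
    problems = []
    if len(records) < min_pairs:
        problems.append(f"only {len(records)} pairs (minimum set to {min_pairs})")
    for after, before, caption in records:
        if before is None:
            problems.append(f"{after}: no BEFORE image generated")
        elif PurePosixPath(before).stem != PurePosixPath(after).stem:
            problems.append(f"{after}: BEFORE file {before} has a different name")
        if trigger not in caption:
            problems.append(f"{after}: caption lacks trigger word {trigger}")
    return problems


records = [
    ("after/img_01.png", "before/img_01.png", "Render the sketch in MYSTYLE style"),
    ("after/img_02.png", None, "Render the sketch in MYSTYLE style"),
    ("after/img_03.png", "before/img_03.png", "Render the sketch in color"),
]
for problem in check_dataset(records, "MYSTYLE", min_pairs=3):
    print(problem)
```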

Why the BACKWARD Method Works Better

Great Alignment: Since you start with the desired result, your input-output pairs are tightly aligned.

Consistency Guaranteed: All your "after" images share the same style/characteristics because you curated them.

Efficient Creation: You can create large datasets quickly using AI editing tools rather than manual creation.

Predictable Results: You know what the LoRA should produce because your training targets are the exact results you started with.

Scalable Process: Once you establish the workflow, you can easily expand your dataset by finding more "after" images and processing them backwards.

Watch our video on training a custom Flux Kontext LoRA in Scenario
