Introduction

After training a custom model in Scenario, evaluating and refining it is an important step to ensure it generates high-quality, consistent visual outputs.

Most models go through a few rounds of evaluation and refinement before reaching their best version. This iterative approach helps you identify your model's strengths and weaknesses so you can make targeted improvements to generation quality.

Part 1: Evaluating Your Model's Performance

1.1 - Test With Various Prompts

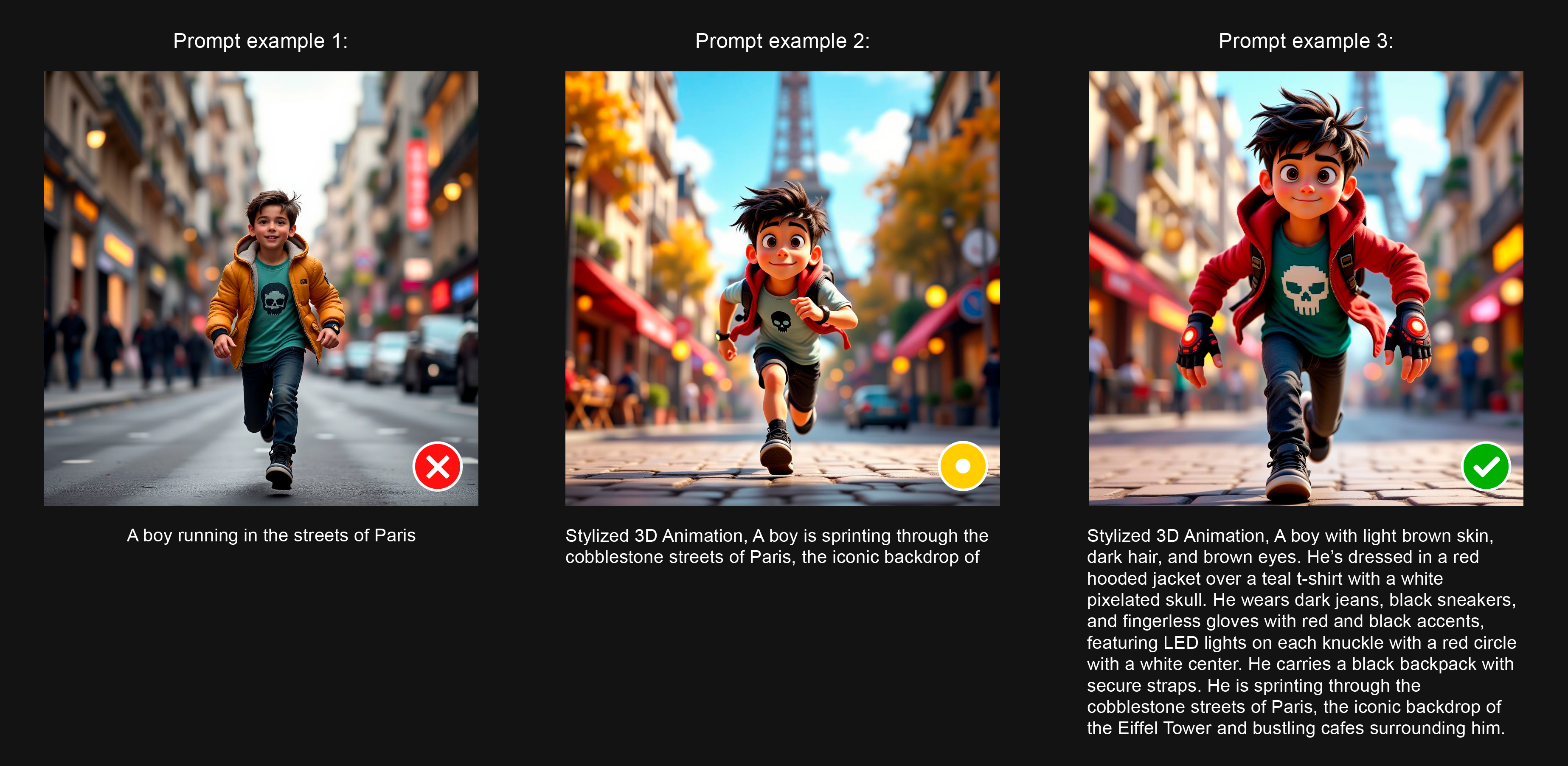

Begin the evaluation by testing your model with a range of prompts to understand its capabilities and limitations. Start with simple, short prompts (5-10 words), then move to more detailed, descriptive ones (20-50 words). Include different settings, scenes, or actions to assess versatility. For character models, test various poses, expressions, and outfits to verify consistency across contexts.
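If you want to keep these tests systematic, a small script can generate the prompt list for you to paste into Scenario. The Python sketch below is a hypothetical helper, not a Scenario feature: it pairs every setting with every action for one subject, builds a short and a detailed variant of each prompt, and flags any prompt outside the 5-10 and 20-50 word ranges suggested above. The subject, settings, actions, and descriptive clause are illustrative placeholders.

```python
from itertools import product

# Word-count ranges suggested above for short and detailed test prompts.
SHORT_RANGE = (5, 10)
DETAILED_RANGE = (20, 50)

def word_count(prompt):
    return len(prompt.split())

def in_range(prompt, bounds):
    low, high = bounds
    return low <= word_count(prompt) <= high

def build_test_prompts(subject, settings, actions, detail):
    """Return (short, detailed) prompt lists covering every setting/action pair.

    `detail` is a descriptive clause appended to each short prompt to make
    its detailed variant.
    """
    short, detailed = [], []
    for setting, action in product(settings, actions):
        base = f"{subject} {action} in {setting}"
        short.append(base)
        detailed.append(f"{base}, {detail}")
    return short, detailed

# Illustrative inputs for a character model; replace them with your own.
short, detailed = build_test_prompts(
    subject="a red-haired knight",
    settings=["a snowy forest", "a crowded market"],
    actions=["drawing a sword", "sitting down"],
    detail="soft morning light, detailed armor with engraved patterns, "
           "painterly brushstrokes, muted color palette, full body shot",
)

# Flag any prompt that falls outside the suggested length for its group.
for prompt in short:
    if not in_range(prompt, SHORT_RANGE):
        print("short prompt out of range:", prompt)
for prompt in detailed:
    if not in_range(prompt, DETAILED_RANGE):
        print("detailed prompt out of range:", prompt)
```

Because every setting is combined with every action, adding one more setting or action grows the test set predictably, which makes gaps in coverage easy to spot.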

1.2 - Use Prompt Spark to Write Better Prompts, Faster

Custom models and base (foundation) models require different prompting approaches. Effective prompts for a custom model typically mirror the style and structure of the captions used during its training. Scenario's Prompt Spark tool helps you craft better prompts and quickly evaluate how your model responds to them.

For instance, use the "dice" icon to generate prompts tailored to your specific model. Alternatively, start with a few keywords about your subject or scene, then use "Rewrite Prompt" (sparkle icon) to expand and refine them. You can also upload an image directly into the prompt box to automatically generate a prompt describing its visual elements.

Experiment to identify which prompt structure works best with your model and determine if you need to add style-related tokens to further guide and control the output.

1.3 - Test With Reference Images

Evaluate how your model performs when guided by reference images. Try different reference modes (Image-to-Image, ControlNet, etc.) and influence levels to see which combinations produce the most consistent results with your model.

1.4 - Identify Specific Issues

During the evaluation, document any patterns or issues you observe:

Style consistency: Does the model maintain the intended style across various prompts?

Detail accuracy: For character models, are key features like faces, hands, or clothing consistently rendered?

Prompt adherence: How well does the model incorporate elements mentioned in prompts?

Technical quality: Are there recurring artifacts, distortions, or quality issues?
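Keeping these observations in a consistent format makes it easier to compare versions later. One way to do that, sketched below in Python under our own assumptions, is to score each test image from 1 to 5 on the four criteria above and flag any criterion whose average falls below a threshold. The 1-5 scale, the threshold of 3, and the class names are choices made for this example, not part of Scenario.

```python
from dataclasses import dataclass, field
from statistics import mean

# The four criteria from section 1.4; the 1-5 scoring scale is our own choice.
CRITERIA = ("style_consistency", "detail_accuracy", "prompt_adherence", "technical_quality")

@dataclass
class TestResult:
    prompt: str
    scores: dict      # criterion name -> score from 1 (poor) to 5 (excellent)
    notes: str = ""

@dataclass
class EvaluationLog:
    model_version: str
    results: list = field(default_factory=list)

    def add(self, prompt, notes="", **scores):
        """Record one test image; every criterion must be scored."""
        missing = set(CRITERIA) - set(scores)
        if missing:
            raise ValueError(f"missing scores for: {sorted(missing)}")
        self.results.append(TestResult(prompt, scores, notes))

    def averages(self):
        """Average score per criterion across all recorded test images."""
        return {c: mean(r.scores[c] for r in self.results) for c in CRITERIA}

    def weak_criteria(self, threshold=3.0):
        """Criteria whose average score falls below the threshold."""
        return [c for c, avg in self.averages().items() if avg < threshold]

# Example: two test images for a hypothetical "v1" of a character model.
log = EvaluationLog("v1")
log.add("knight in a snowy forest", notes="hands distorted",
        style_consistency=4, detail_accuracy=2, prompt_adherence=4, technical_quality=5)
log.add("knight in a crowded market",
        style_consistency=5, detail_accuracy=3, prompt_adherence=3, technical_quality=4)
print(log.weak_criteria())  # prints ['detail_accuracy']
```

The weak criteria point directly at what to change in Part 2; in this example, low detail accuracy would suggest adding close-up training images.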

Part 2: Improving Your Model

Once you've evaluated your model and identified areas for improvement, you can retrain it and test different approaches to improve its quality. On the model page, open the three-dot menu and select "Retrain". This duplicates all of the original training images, captions, and settings so you can adjust them.

2.1 - Adjust the Training Set

If prompting can’t sufficiently address the issues, consider reviewing and adjusting your training dataset. For instance:

Remove similar or redundant images if your training set contains too many visually similar images. This helps prevent overfitting.

Remove inconsistent images that don't align with the intended style or subjects. Even a few outliers can significantly impact model performance.

Optimize the size of the dataset by focusing on the best, most representative images. Starting with 10-20 images is typically better than using a very large set that might confuse the model.

Add more diverse images that showcase elements your model struggles with, while maintaining your core style. For example, if outfit details or faces are poorly rendered, include more close-up, detailed examples of those outfits or faces.

Replace lower-resolution images with higher-quality alternatives (1024px or larger). You can use Scenario's Enhance tool to upscale them.
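Some of these checks are easy to automate before uploading. The Python sketch below is a hypothetical helper, not part of Scenario: it warns when the set falls outside the 10-20 image starting range, when an image's shorter side is under 1024px, and when two files are byte-for-byte identical. Hashing only catches exact duplicates; spotting visually similar images still requires reviewing the set yourself. Applying the 1024px guideline to the shorter side is our assumption.

```python
import hashlib
from dataclasses import dataclass

MIN_SIDE = 1024                # "1024px or larger", applied to the shorter side (our assumption)
RECOMMENDED_COUNT = (10, 20)   # typical starting range from the guideline above

@dataclass
class TrainingImage:
    name: str
    width: int
    height: int
    data: bytes                # raw file contents, used only to detect exact duplicates

def audit_dataset(images):
    """Return human-readable warnings about a candidate training set."""
    warnings = []
    low, high = RECOMMENDED_COUNT
    if not low <= len(images) <= high:
        warnings.append(f"{len(images)} images; {low}-{high} is a typical starting point")

    seen = {}                  # SHA-256 digest -> name of the first file with that content
    for img in images:
        if min(img.width, img.height) < MIN_SIDE:
            warnings.append(f"{img.name}: {img.width}x{img.height} is below {MIN_SIDE}px, consider upscaling")
        digest = hashlib.sha256(img.data).hexdigest()
        if digest in seen:
            warnings.append(f"{img.name}: identical to {seen[digest]}")
        else:
            seen[digest] = img.name
    return warnings

# Example with placeholder file contents; in practice, read each file's bytes from disk.
images = [
    TrainingImage("a.png", 1024, 1024, b"a"),
    TrainingImage("b.png", 512, 768, b"b"),
    TrainingImage("c.png", 1536, 1024, b"a"),
]
for warning in audit_dataset(images):
    print(warning)
```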

2.2 - Refine Your Captions

Crafting high-quality captions is crucial for successful AI model training, as they directly influence how the model interprets and generates visuals from prompts. While Scenario's auto-caption feature provides a great starting point, it’s recommended to review and refine these suggestions to ensure they accurately capture each image's essence and emphasize its most important characteristics.

When writing captions, approach them as if describing the image to someone who cannot see it. Be thoroughly descriptive about key elements like subjects, colors, materials, styles, and distinctive features. You can separate these descriptions with commas for clarity. Words appearing earlier in captions may carry more weight during generation, so prioritize the most defining visual elements at the beginning of your captions.
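If you assemble captions from notes or a spreadsheet, you can enforce this ordering mechanically. The Python sketch below assumes you tag each descriptive element with a priority number (lower means more defining); it sorts the elements by priority and joins them with commas. The priority scheme is a convention invented for this example.

```python
def build_caption(elements):
    """Join (priority, text) pairs into a comma-separated caption.

    Lower priority numbers are placed first, following the advice that
    earlier words may carry more weight. Empty entries are dropped.
    """
    ordered = sorted(elements, key=lambda pair: pair[0])
    parts = [text.strip() for _, text in ordered if text.strip()]
    return ", ".join(parts)

caption = build_caption([
    (3, "soft watercolor shading"),
    (1, "a small green dragon"),
    (2, "curled up on a stack of old books"),
    (4, "warm candlelight"),
])
print(caption)
# a small green dragon, curled up on a stack of old books, soft watercolor shading, warm candlelight
```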

Different base models respond best to different captioning approaches. For instance, SDXL typically performs better with shorter, more precise captions, while Flux accommodates both short and detailed descriptions.

For style models trained on larger datasets (40-50 diverse images) that share a consistent aesthetic, you can experiment with training without any captions. In this case, expect to write longer, more descriptive prompts to get the expected results.

For character or subject models, consider adding a unique trigger word (especially with SDXL) to help the model consistently recognize and reproduce a specific character. Create a distinctive word that doesn't exist in common language (like "Robozilla" or "Valkyrie7") and include it in all of your training image captions. Later, when generating images, add this trigger word to your prompt to tell the model which specific character or subject to create.

Think of it as giving your character a unique name that only your model recognizes: when you use this name in a prompt, the model knows which character you're referring to, including its distinctive features and style.
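When you have many captions, adding the trigger word by hand is error-prone. The Python sketch below is a hypothetical helper that prefixes each caption with the trigger word unless the caption already contains it (matched as a whole word, ignoring case). Placing the word first follows the earlier advice that words near the start of a caption may carry more weight.

```python
import re

def add_trigger_word(captions, trigger):
    """Prefix each caption with `trigger` unless it already appears as a whole word."""
    pattern = re.compile(rf"\b{re.escape(trigger)}\b", re.IGNORECASE)
    result = []
    for caption in captions:
        if pattern.search(caption):
            result.append(caption)             # already tagged, keep as-is
        else:
            result.append(f"{trigger}, {caption}")
    return result

captions = add_trigger_word(
    ["a robot standing in a city", "Robozilla roaring at night"],
    "Robozilla",
)
print(captions)
# ['Robozilla, a robot standing in a city', 'Robozilla roaring at night']
```

Use the same spelling of the trigger word in your generation prompts as in the captions.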

For more information, see Advanced Captioning.

2.3 - Adjust Advanced Training Settings

For more detailed refinements, you can modify the technical training parameters before retraining a model.

Lower the learning rate (e.g., from 1e-4 to 5e-5) for more subtle style learning and finer adjustments.

Increase training steps for more thorough learning of complex styles or characters.

It’s recommended to balance the learning rate with the number of training steps: if you increase one, reduce the other so the overall amount of learning stays roughly the same.
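One simple way to make "reduce the other" concrete is to keep the product of learning rate and step count roughly constant. This inverse-proportional rule is our own rough heuristic for picking a starting point, not a formula Scenario documents, and the result should still be checked by testing. The baseline of 1,500 steps in the example is illustrative.

```python
def balanced_steps(base_lr, base_steps, new_lr):
    """Scale the step count so that learning_rate * steps stays constant.

    A rough starting-point heuristic only; it is not a formula Scenario
    documents, so verify the result by testing the retrained model.
    """
    if new_lr <= 0:
        raise ValueError("learning rate must be positive")
    return round(base_steps * base_lr / new_lr)

# Illustrative baseline: 1,500 steps at 1e-4, retrained at the lower rate 5e-5.
steps = balanced_steps(base_lr=1e-4, base_steps=1500, new_lr=5e-5)
print(steps)  # 3000
```

Under this rule, halving the learning rate from 1e-4 to 5e-5 doubles the step count.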

For more information, see Advanced Settings.

2.4 - Create Multi-LoRAs (Model Merging)

If retraining doesn't fully achieve the desired results, consider an alternative approach: merging your LoRA model with other, complementary style models.

From the model page, click "Use This Model" > "Compose" and follow the steps. Test different combinations and adjust influence weights for each component to find the optimal balance while addressing any weaknesses identified during your evaluation.
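Trying weight combinations ad hoc can miss useful settings. The Python sketch below lists every combination of candidate influence weights for the models you are composing, so you can work through them methodically in the Compose tool. The model names and candidate weights are placeholders, and the optional `max_total` cap, which skips combinations whose weights add up to more than a given value, is an assumption of this example rather than a Scenario rule.

```python
from itertools import product

def weight_grid(components, candidates, max_total=None):
    """Yield dicts mapping each component model to an influence weight.

    Every component is tried with every candidate weight. If `max_total` is
    given, combinations whose weights sum to more than it are skipped.
    """
    for combo in product(candidates, repeat=len(components)):
        if max_total is not None and sum(combo) > max_total:
            continue
        yield dict(zip(components, combo))

# Placeholder model names and candidate weights.
grid = list(weight_grid(["my-character-lora", "watercolor-style"], [0.4, 0.7, 1.0], max_total=1.5))
for weights in grid:
    print(weights)
```

The number of combinations grows quickly with each added model, so a small candidate list per model keeps the test set manageable.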

For more information about Multi-LoRA models, please visit this page.

Part 3: Finalizing and Documenting

3.1 - Compare Different Model Versions

After implementing improvements, compare your different model versions to see which performs best.

Create a series of test images using exactly the same prompts and seeds across all model versions; this gives you an accurate, side-by-side comparison. Check which version best resolves the issues you identified during your evaluation.
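If you track comparison runs in a script or spreadsheet, it can help to generate the full test plan up front. The Python sketch below draws a fixed list of seeds once from a master seed and pairs every prompt/seed combination with every model version, so each version is tested under identical conditions and results for the same prompt and seed sit next to each other. The version names, the number of seeds, and the 32-bit seed range are assumptions; use whatever seed range the generator accepts.

```python
import random
from itertools import product

def comparison_plan(versions, prompts, n_seeds=3, master_seed=1234):
    """List every (version, prompt, seed) job for a side-by-side comparison.

    Seeds are drawn once from a fixed master seed, so every model version is
    tested with exactly the same prompt/seed pairs. Jobs for the same prompt
    and seed are adjacent, one per version.
    """
    rng = random.Random(master_seed)
    seeds = [rng.randrange(2**32) for _ in range(n_seeds)]  # assumed 32-bit seed range
    return [
        {"version": version, "prompt": prompt, "seed": seed}
        for (prompt, seed), version in product(product(prompts, seeds), versions)
    ]

# Hypothetical version names; use the names of your own trained models.
plan = comparison_plan(["knight-v1", "knight-v2"],
                       ["knight in a snowy forest", "knight in a crowded market"])
for job in plan:
    print(job)
```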

3.2 - Document Your Process and Findings

Finally, take some time to document what you've found about your improved model. Add pinned images or keep track of which prompts give you the best results.

Make note of any style tokens or descriptors that consistently improve your outputs, and consider adding them to the “Prompt Embedding” section. Record how the different versions performed, and add any valuable insights to your model description so that you (and anyone else using your model) can get great results from the start. Good documentation saves time and frustration when you return to a model after a break!

Final Tips for Effective Model Management

Make incremental changes by focusing on one element at a time to clearly identify what impacts performance. Be patient with the process, as finding the perfect balance typically requires several iterations. Generate sufficient test images (10-20) with varied prompts before concluding whether a refinement was successful.
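Changing one element at a time is easier to keep track of if each experiment is derived from a fixed baseline. The Python sketch below takes a baseline configuration and a set of alternative values, and produces one configuration per change, each differing from the baseline in exactly one setting. The setting names and values are illustrative, not Scenario's parameter names.

```python
def one_change_at_a_time(baseline, variations):
    """Yield (label, config) pairs that each differ from the baseline in one setting.

    `baseline` is a dict of settings; `variations` maps a setting name to
    alternative values to try. Values equal to the baseline are skipped.
    """
    for key, values in variations.items():
        if key not in baseline:
            raise KeyError(f"unknown setting: {key}")
        for value in values:
            if value == baseline[key]:
                continue
            config = dict(baseline)   # copy so the baseline stays untouched
            config[key] = value
            yield f"{key}={value}", config

# Hypothetical settings for illustration only.
baseline = {"learning_rate": 1e-4, "training_steps": 1500, "num_images": 15}
runs = list(one_change_at_a_time(baseline, {"learning_rate": [5e-5], "num_images": [12, 15, 20]}))
for label, config in runs:
    print(label, config)
```

Because each run differs from the baseline in a single setting, any change in quality can be attributed to that setting.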

Remember that different applications (concept art, character design, environment creation) may require different optimization approaches tailored to their specific needs. By systematically evaluating and refining your models, you'll develop expertise in creating consistent, high-quality visual assets that align with your creative vision.

Access This Feature Via API

Resources:

Generate Prompts: Scenario API Documentation - Prompt Generator

Generate Images (Text-to-Image): Scenario API Documentation - Generate Images

Recipes: Explore detailed guides and examples in Scenario's API Recipes to effectively implement these features.
