IMPORTANT: As of December 2025, Runway Gen-4 References is only available via the Scenario API (not in the web UI). Please reach out to our team for more information.

IMPORTANT: When using Runway Gen-4 References on Scenario, you may need to reference your input images as "image_1", "image_2", and "image_3" (depending on how many input images you’re using). This helps the AI models clearly understand which inputs and elements you want them to take into account. More information is provided below.

1. Overview

Gen-4 References enables you to use one or more images to generate new visuals that incorporate styles, characters, objects, or traits from those reference images. You can isolate a character from an image and place them in a different environment, modify elements of a character or scene, merge visual styles between multiple images, or synthesize components from various sources into a single cohesive output.

This tool is especially powerful when it comes to maintaining character consistency across different lighting scenarios, locations, and treatments — even when working from a single reference image.

In this article, you'll find guidance on optimal image inputs, effective prompting techniques, recommended workflows, and the types of results you can expect from using Gen-4 References.

2. Strengths

Runway Gen-4 is particularly strong at generating consistent sequential images, especially when it comes to recurring characters or elements. While it's versatile across different visual styles, its standout capability is delivering realistic imagery.

The model supports up to three reference images and accommodates various resolutions, with a maximum of 720×720 pixels for 1:1 and 1280×720 pixels for 16:9 formats.
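As a quick sanity check before submitting a job, the limits above can be encoded in a small helper. This is only a sketch: the name `fits_limit` is hypothetical, it assumes the stated maximums apply to the size you request, and it covers only the two aspect ratios listed in this article.

```python
# Maximum sizes stated in this article, keyed by aspect ratio.
# Other ratios are not listed here, so they are deliberately left out.
MAX_SIZE = {"1:1": (720, 720), "16:9": (1280, 720)}


def fits_limit(width: int, height: int, ratio: str) -> bool:
    """Return True if width x height matches `ratio` and stays within the stated maximum."""
    if ratio not in MAX_SIZE:
        raise ValueError(f"no limit listed in this article for ratio {ratio!r}")
    ratio_w, ratio_h = (int(part) for part in ratio.split(":"))
    max_w, max_h = MAX_SIZE[ratio]
    # Cross-multiply to compare aspect ratios without floating-point error.
    same_ratio = width * ratio_h == height * ratio_w
    return same_ratio and 0 < width <= max_w and 0 < height <= max_h


print(fits_limit(1280, 720, "16:9"))   # within the stated 16:9 maximum
print(fits_limit(1920, 1080, "16:9"))  # exceeds the stated 16:9 maximum
```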

3. Referencing Images with "image_1", "image_2", and Beyond

Using consistent image labels such as "image_1", "image_2", and "image_3" in your prompts allows Runway Gen-4 to clearly understand which inputs should influence the output—and how. This approach is essential when combining elements across different references or when compositional control is important. Labeling images explicitly improves output quality, removes ambiguity, and enables finer creative direction across multiple stages of image generation.

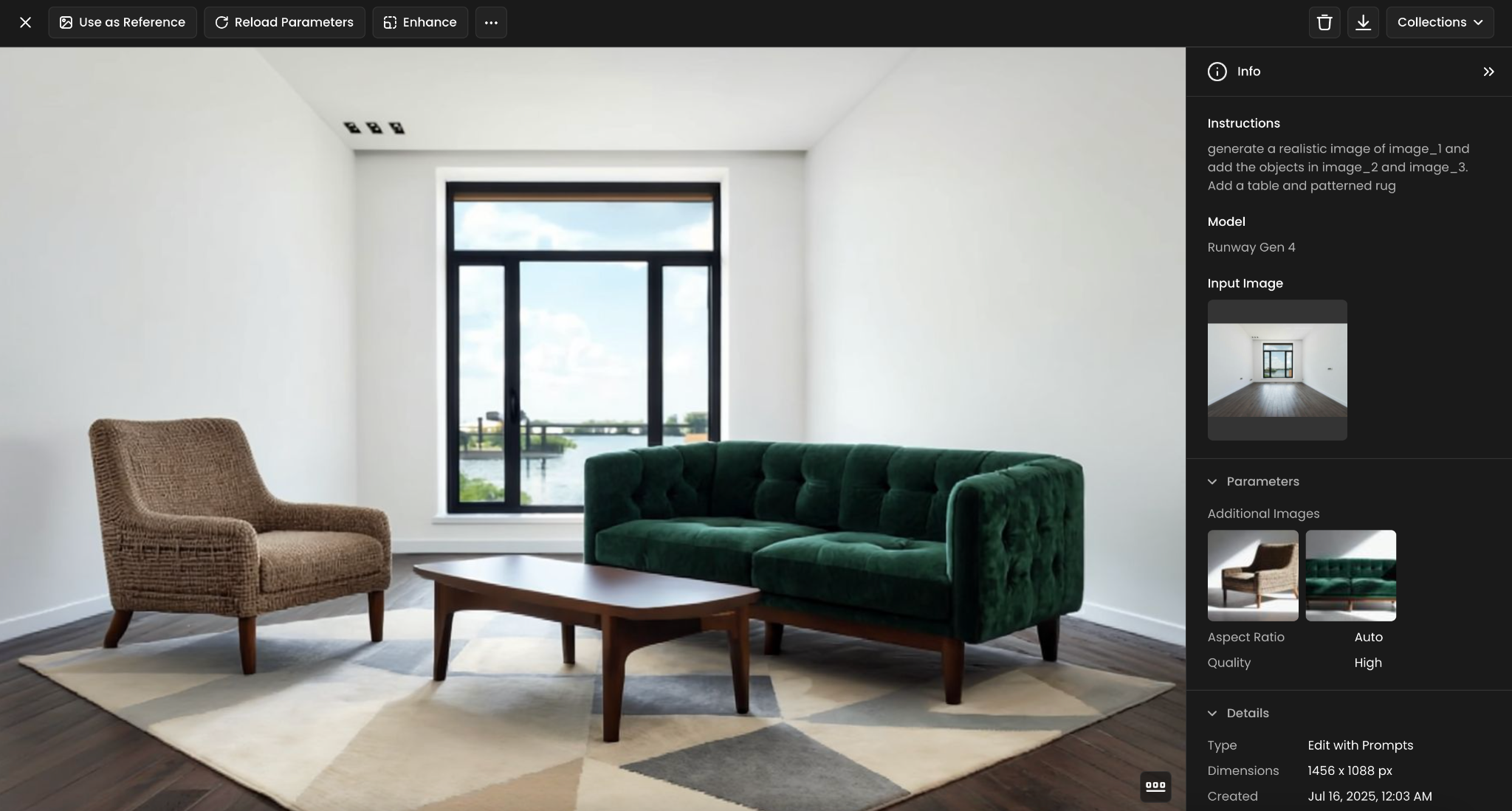

Take the following sofa composition as an example.

Prompt: generate a realistic image of image_1 and add the objects in image_2 and image_3. Add a table and patterned rug

In this case, "image_1" provided the base environment (an empty living room), while "image_2" and "image_3" contributed distinct furniture pieces. The resulting scene was composed realistically and coherently—thanks to the proper use of labeled image inputs.

This method gives users the ability to modularize scenes, reuse assets, and guide composition, all while maintaining clarity for the AI. Whether you're blending styles, inserting characters, or building sets, this labeling structure is foundational to successful multi-image prompting.
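Because a mislabeled reference is easy to miss, it can help to check a prompt against the number of input images before sending it. The sketch below is illustrative only: `check_labels` is a hypothetical helper, not part of the Scenario API, and it assumes labels follow the "image_N" pattern described above, numbered in the order the images are supplied.

```python
import re

MAX_REFERENCES = 3  # this article states up to three reference images
LABEL = re.compile(r"\bimage_(\d+)\b")


def check_labels(prompt: str, num_images: int) -> list[str]:
    """Return a list of problems; an empty list means labels and inputs line up."""
    if not 1 <= num_images <= MAX_REFERENCES:
        return [f"expected 1 to {MAX_REFERENCES} reference images, got {num_images}"]
    used = {int(n) for n in LABEL.findall(prompt)}
    problems = []
    # Labels that point at an image that was never supplied.
    for n in sorted(used):
        if not 1 <= n <= num_images:
            problems.append(f"image_{n} has no matching input")
    # Supplied images that the prompt never mentions.
    for n in range(1, num_images + 1):
        if n not in used:
            problems.append(f"input {n} is never referenced as image_{n}")
    return problems


prompt = (
    "generate a realistic image of image_1 and add the objects in "
    "image_2 and image_3. Add a table and patterned rug"
)
print(check_labels(prompt, 3))
print(check_labels(prompt, 2))
```

Run against the sofa prompt with three inputs, the helper reports no problems; with only two inputs, it flags the dangling "image_3" label.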

4. Use Cases & Examples

Below are some examples and use cases that demonstrate the versatility of Gen-4 References. Each highlights a different way to apply reference images for creative control and precision. Because Gen-4 is still new, many creative possibilities remain unexplored. Don’t hesitate to experiment and go beyond the examples provided here.

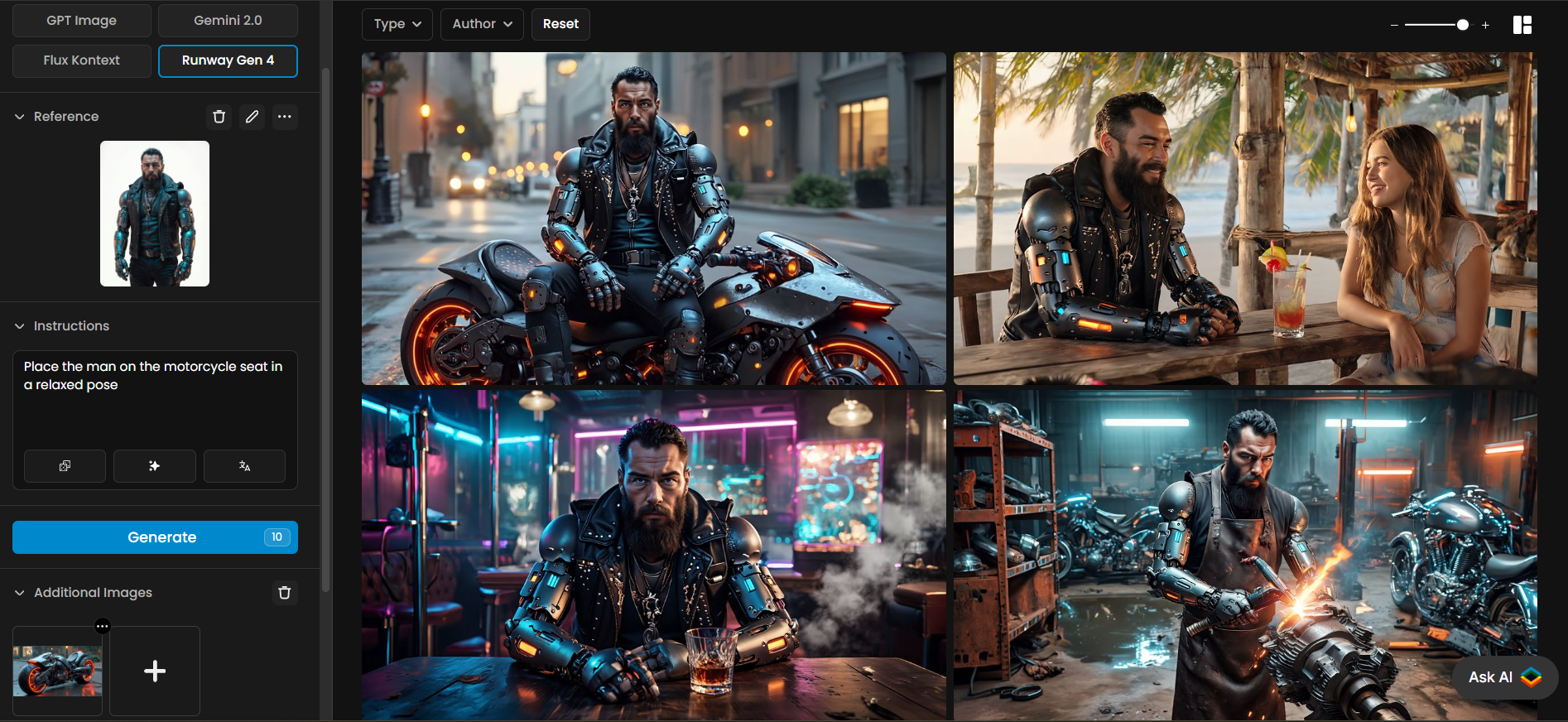

a. Character Consistency Across Scenes

Gen-4 References is especially effective for maintaining consistent visual identity across scenes with the same character. For example, a “cybernetic biker” can appear in various scenarios: seated in a relaxed pose on a futuristic motorcycle in the city, sharing a drink at a beachside bar, sitting confidently in a neon-lit nightclub, or working in a high-tech garage. Throughout each scene, the model preserves the character’s design, details, and proportions, ensuring coherence and recognizability from one environment to the next.

Note: The “Enhance” tool on Scenario can be leveraged in post-processing to improve resolution, sharpen fine details, and correct minor distortions, resulting in a cleaner, more polished final image. For more information, see Enhance.

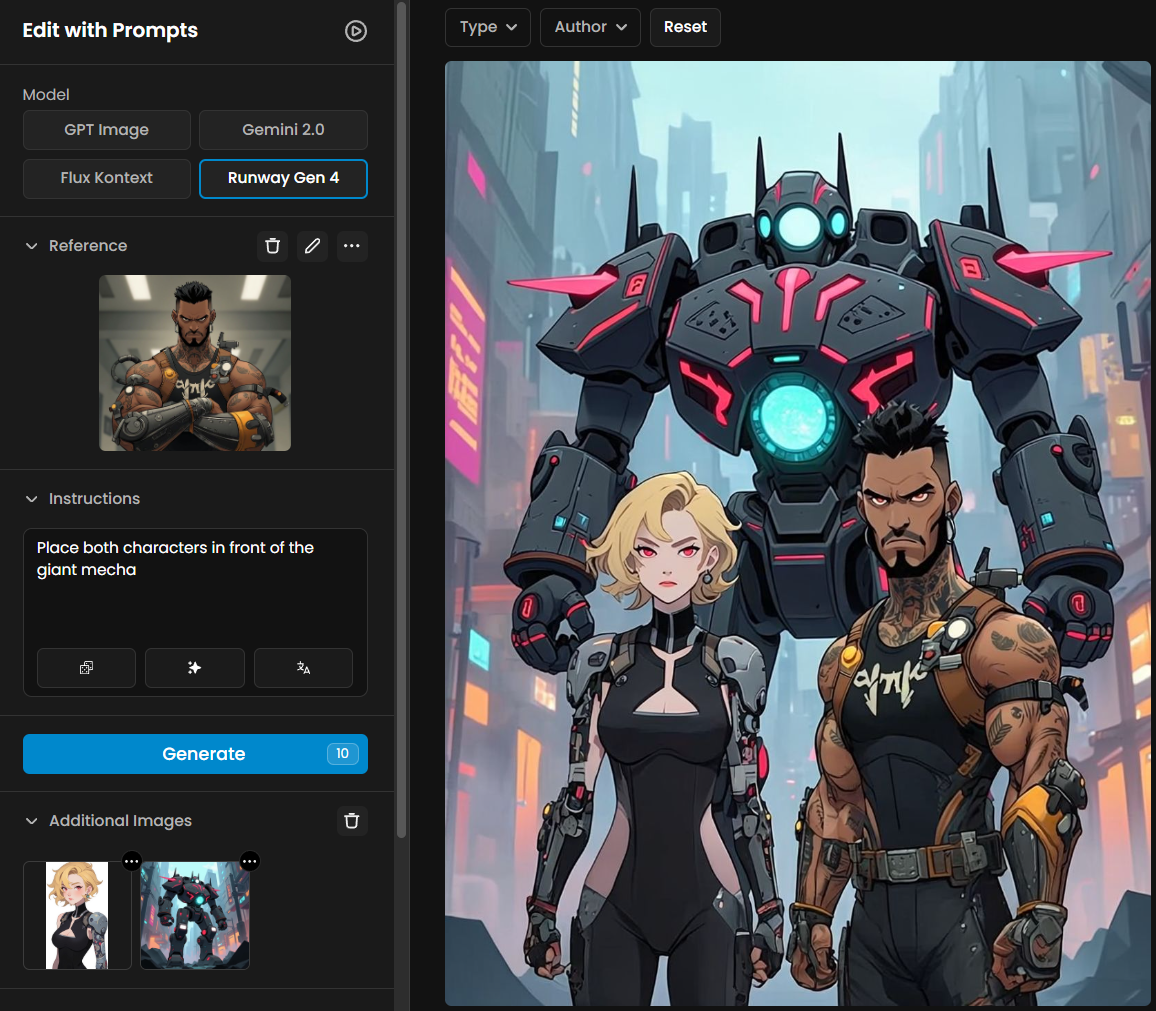

b. Blending Multiple Subjects in a Single Scene

Gen-4 References can seamlessly integrate multiple subjects into one cohesive image. In this example, two cyberpunk characters and a towering mecha are placed together in a futuristic cityscape. The model maintains consistent proportions, poses, and stylistic details across all elements, resulting in a unified and visually balanced composition.

c. Restyling Objects Across Genres and Themes

Gen-4 References enables the transformation of existing objects into new stylistic contexts—shifting their look and feel to match different genres, moods, or world building needs.

For instance, a standard car can be reimagined as a post-apocalyptic vehicle, with armored panels, rough textures, and improvised add-ons. The model adapts surface details and design cues to fit the desired theme while preserving the object’s overall identity—making it a valuable tool for rapid exploration of visual direction.

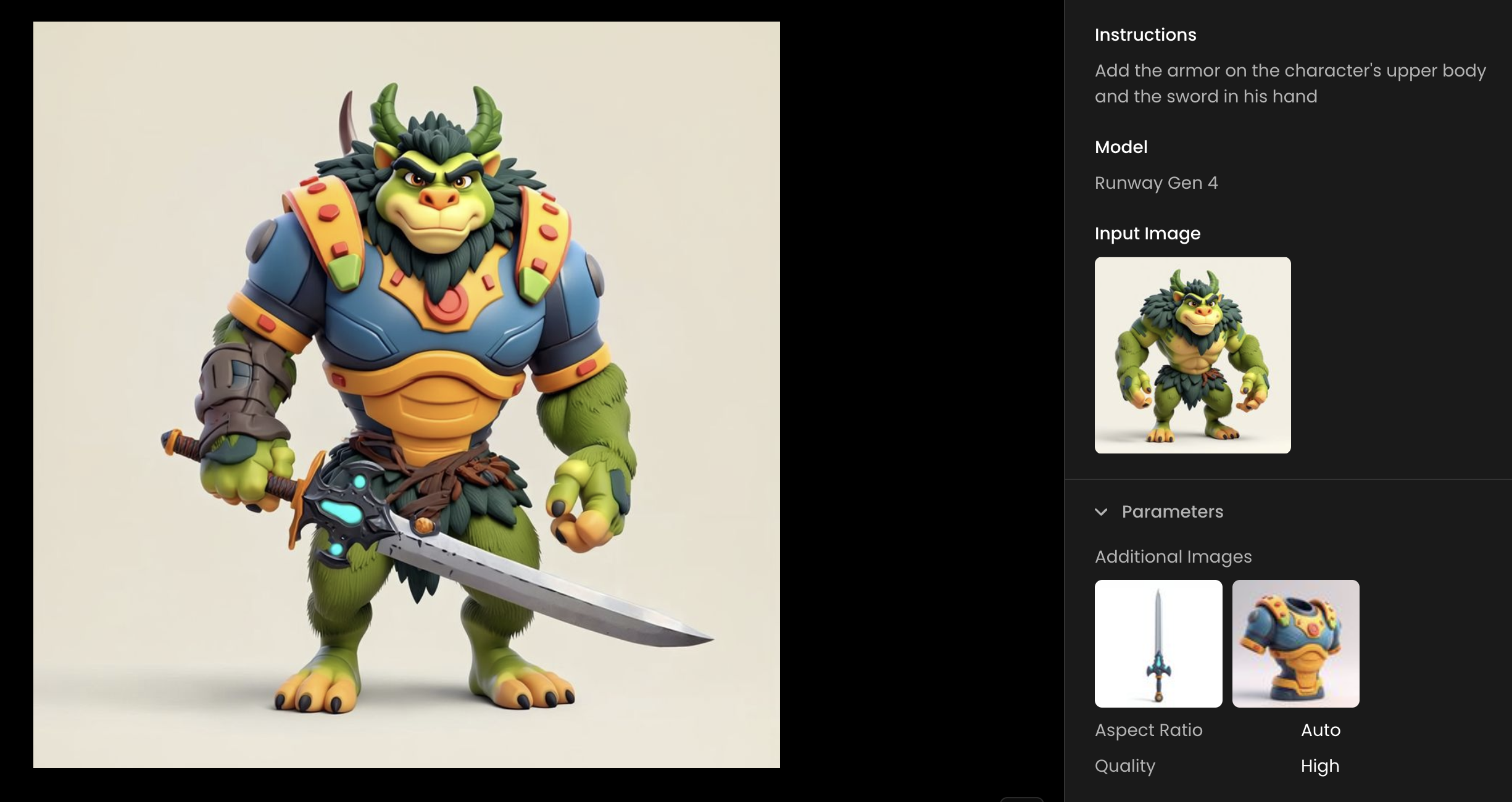

d. Apply Elements with a Single Image

Add the element you want to incorporate alongside your original subject. Draw an arrow connecting the element to where it should appear on the subject and include a simple text label describing the intended result.

Use this annotated reference image without additional prompting to achieve precise element placement while maintaining your subject's core characteristics.

e. Bounding Box to Guide Gaze

Add simple annotations to direct where your subject looks. Draw an arrow near the eyes and add accompanying text indicating the desired gaze direction.

Use this annotated reference image without additional prompting to achieve subtle yet precise control over your subject's attention focus.

f. Bounding Box to Guide Composition

Add simple shapes and drawings to your reference image to define compositional boundaries. Draw rectangles, circles, or other shapes with labels where you want specific elements to appear or align. Complement this visual guidance with text prompts to specify style and details for each bounded area.

This technique provides structural guidance for your composition while allowing creative freedom within the established framework, resulting in more balanced and intentionally arranged scenes.

g. Brand and Style Transfer


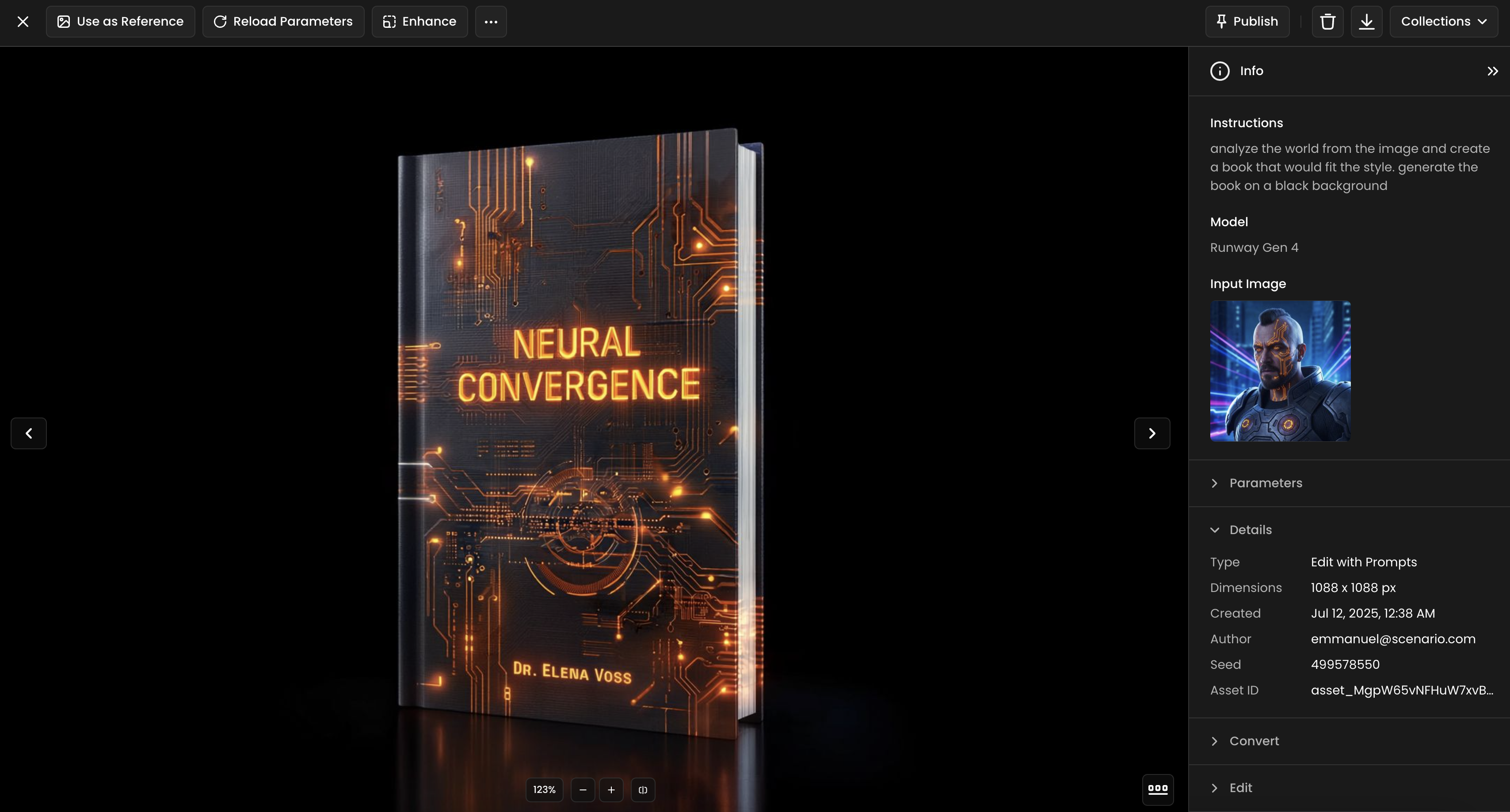

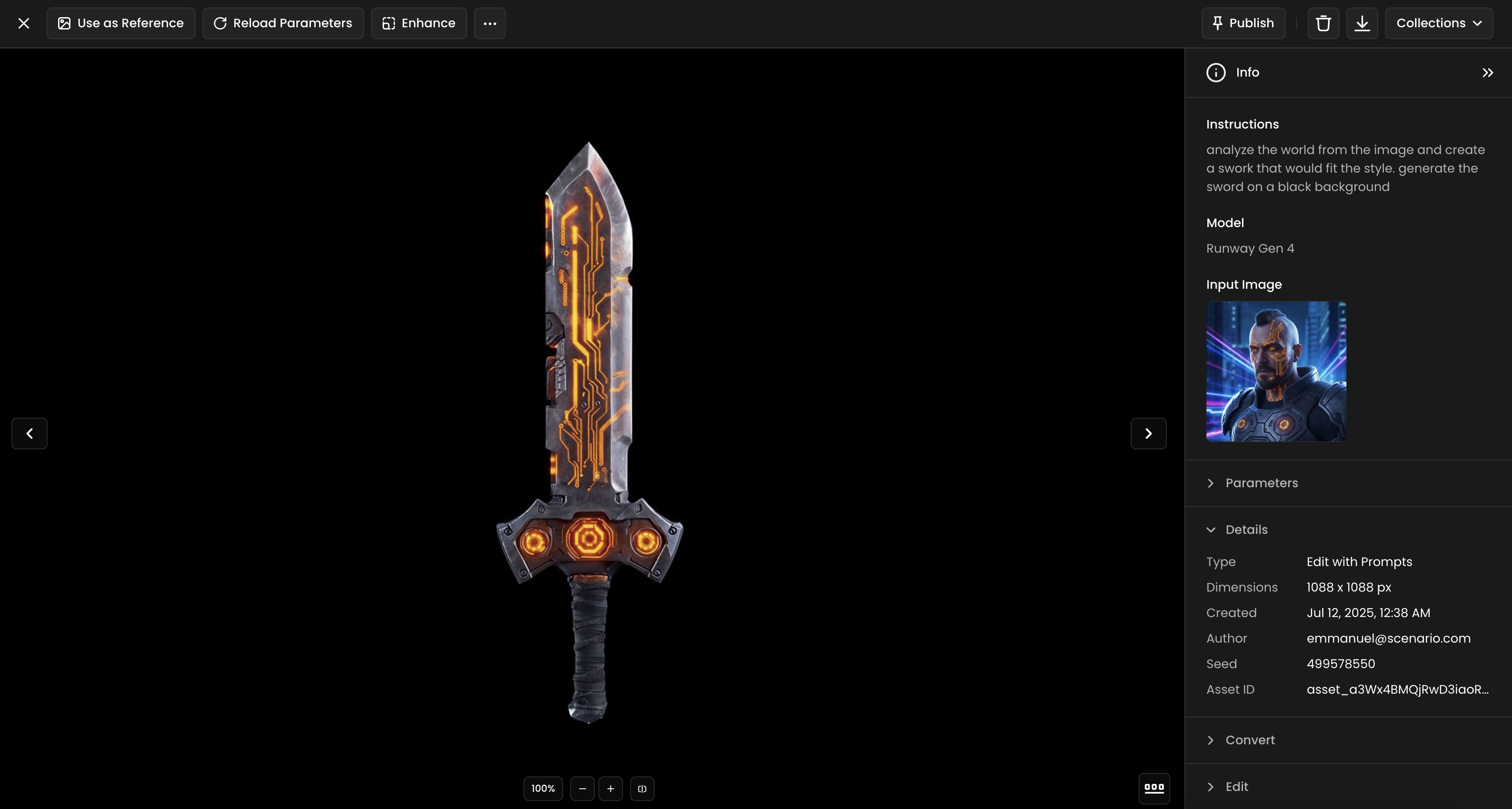

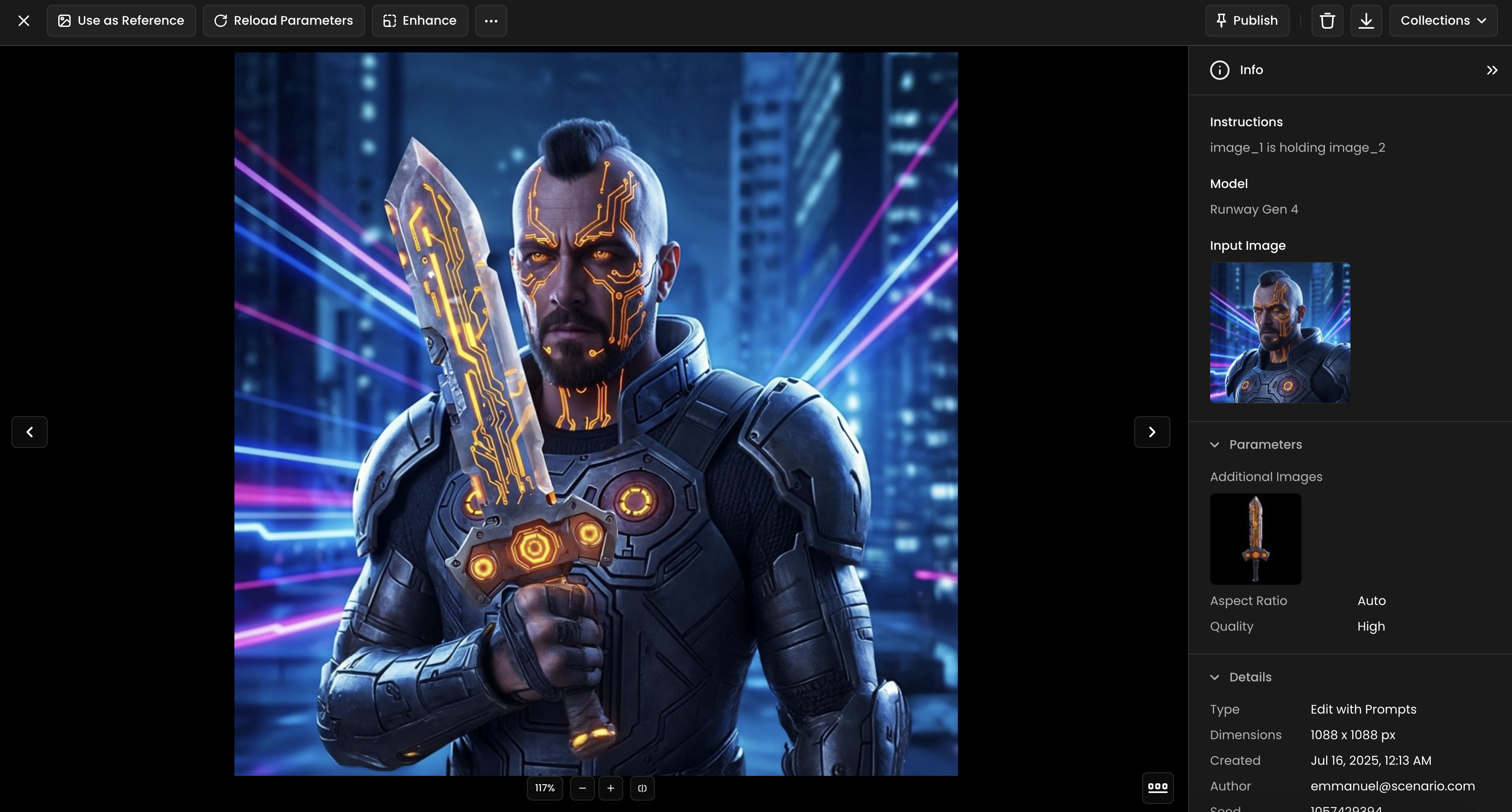

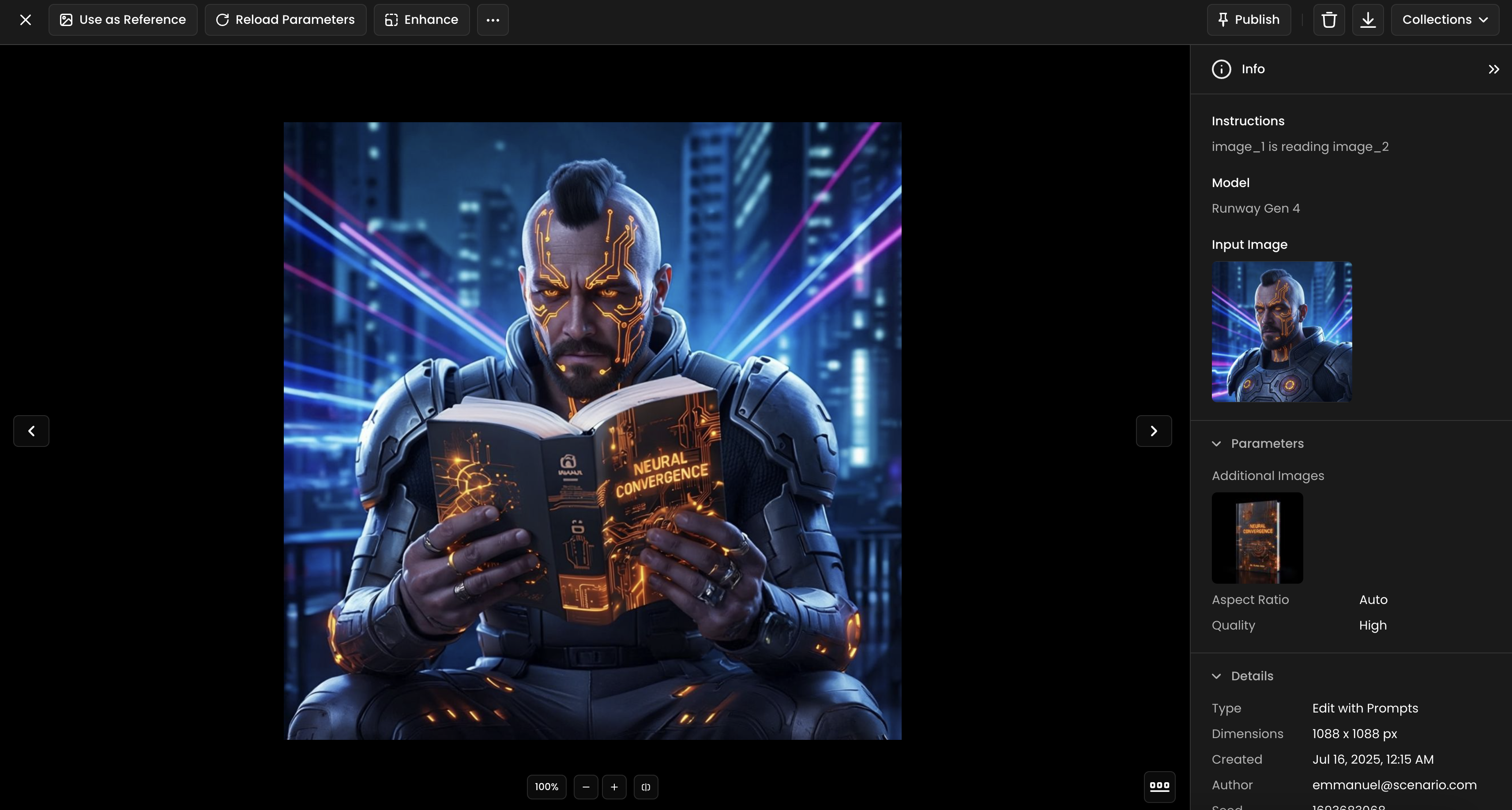

h. Building Worlds with Stylized Objects

Gen-4 References allows you to expand a single starting image into a cohesive visual world where new objects and scenes can be generated that naturally belong together.

This approach maintains consistency across all elements while enabling these new objects to interact with your original subject when reused as references, creating an immersive composition without the need for multiple source images.

Prompt: analyze the world from the image and create a book that would fit the style. generate the book on a black background

Prompt: analyze the world from the image and create a sword that would fit the style. generate the sword on a black background

After creating the objects, you can then prompt for your character to interact with them:

Prompt: image_1 is holding image_2

Prompt: image_1 is reading image_2
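The two-stage workflow above (first generate an object that fits the world, then have the character interact with it) can be scripted as plain prompt templates. This is a minimal sketch: the function names are hypothetical, and it assumes "image_1" is the character reference and "image_2" is the object generated in the first stage.

```python
# Stage 1 template, modeled on the object prompts shown above.
OBJECT_TEMPLATE = (
    "analyze the world from the image and create a {obj} that would fit the style. "
    "generate the {obj} on a black background"
)


def object_prompt(obj: str) -> str:
    """Build the stage 1 prompt that asks for a new object in the world's style."""
    return OBJECT_TEMPLATE.format(obj=obj)


def interaction_prompt(action: str) -> str:
    """Build the stage 2 prompt; assumes image_1 is the character and image_2 the object."""
    return f"image_1 is {action} image_2"


# Pair each object with the action the character should perform with it.
for obj, action in [("book", "reading"), ("sword", "holding")]:
    print(object_prompt(obj))
    print(interaction_prompt(action))
```

Keeping the object name in a single template slot avoids mismatches between the object being created and the object being placed on the background.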

i. Colorizing Black and White Images

Use a black and white reference image paired with a prompt requesting colorization or restoration.

For more control, specify color preferences for key elements or request period-appropriate coloring for historical images.

| Input image | Prompt | Output |
| --- | --- | --- |
| | Colorize the image in | |
| | Restore and colorize the photograph from | |

j. Designing Interiors

References transform home or set staging by allowing you to upload a single image of an interior space and then add furniture references to place them directly into the scene.

This streamlined approach lets you visualize different design options within a space, creating realistic and cohesive room compositions without physically moving furniture or relying on imagination alone.

| Input 1 | Input 2 | Input 3 | Prompt | Output |
| --- | --- | --- | --- | --- |
| | | | Generate a realistic image of | |

Try combining this approach with bounding boxes and multiple elements in a single image for additional control.

k. Stylizing Images

Combine two reference images to transfer artistic styles between subjects or environments.

Use one image to define the desired visual aesthetic and another to provide the subject or scene structure.

| Input 1 | Input 2 | Prompt | Output |
| --- | --- | --- | --- |
| | | Analyze the art style from | |

l. Stylizing Text

Use two reference images to transform text presentation styles.

Include one image showing your desired handwritten or typographic style and another containing the text content you want to modify.

| Input 1 | Input 2 | Prompt | Output |
| --- | --- | --- | --- |
| | | Respect the composition and draw | |

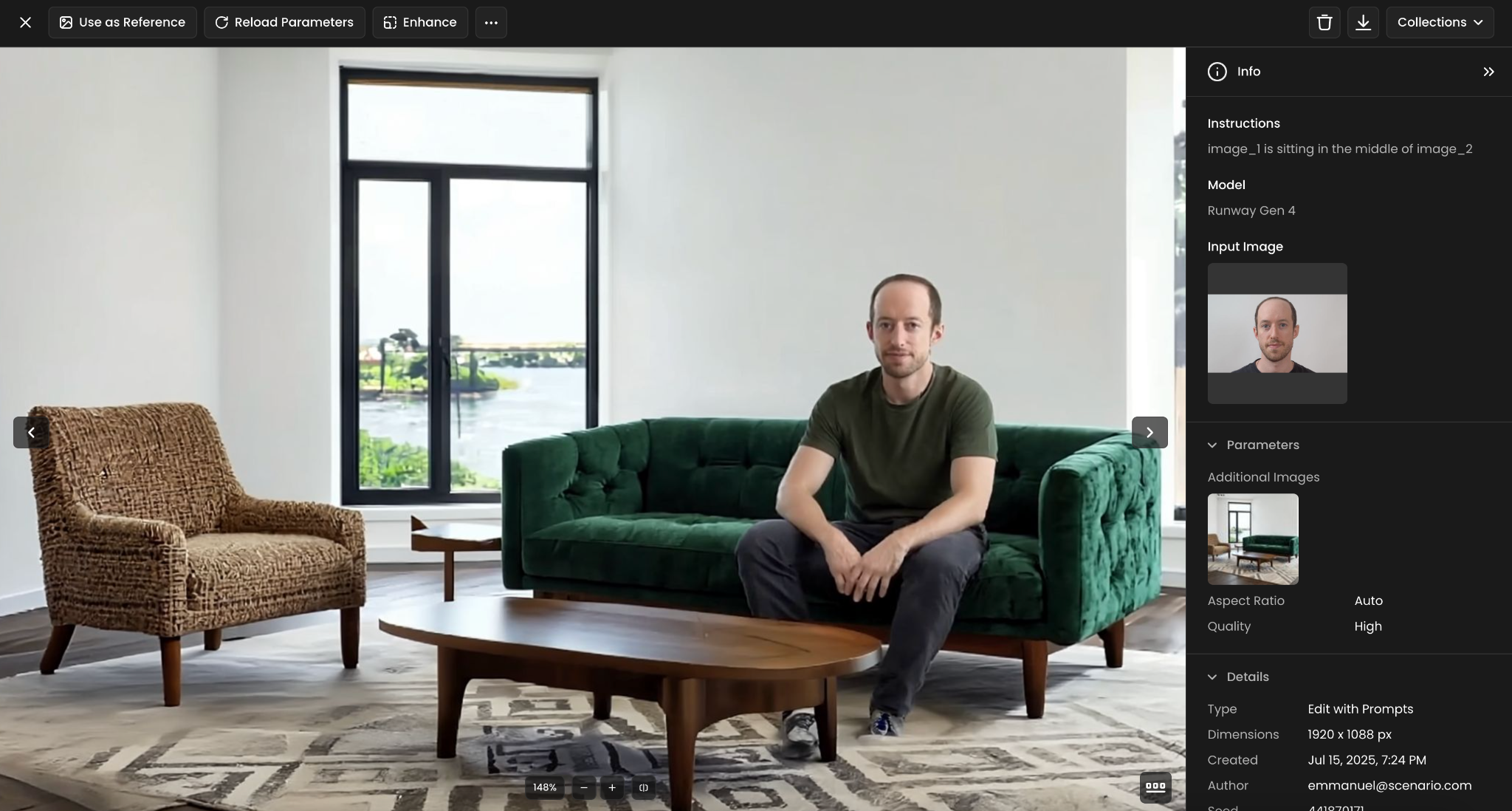

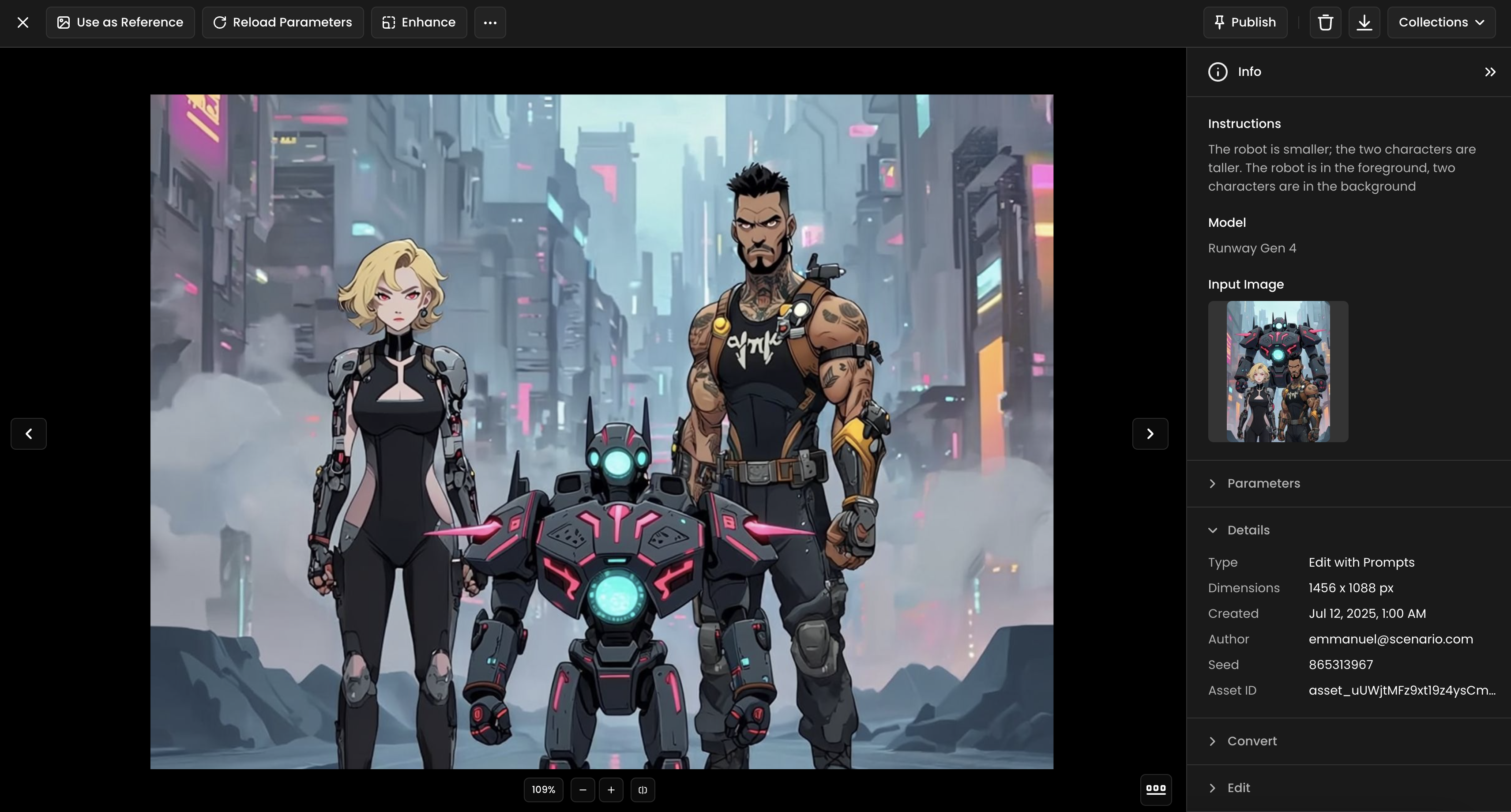

m. Reposition and Resize Characters

Use Gen-4 References to adjust composition by isolating and modifying specific characters or elements. In this example, the original robot character was resized and repositioned into the foreground, allowing it to stand alongside the two human figures without overwhelming the scene. Try using this technique to emphasize scale, shift focus, or establish new dynamics between subjects in a scene.

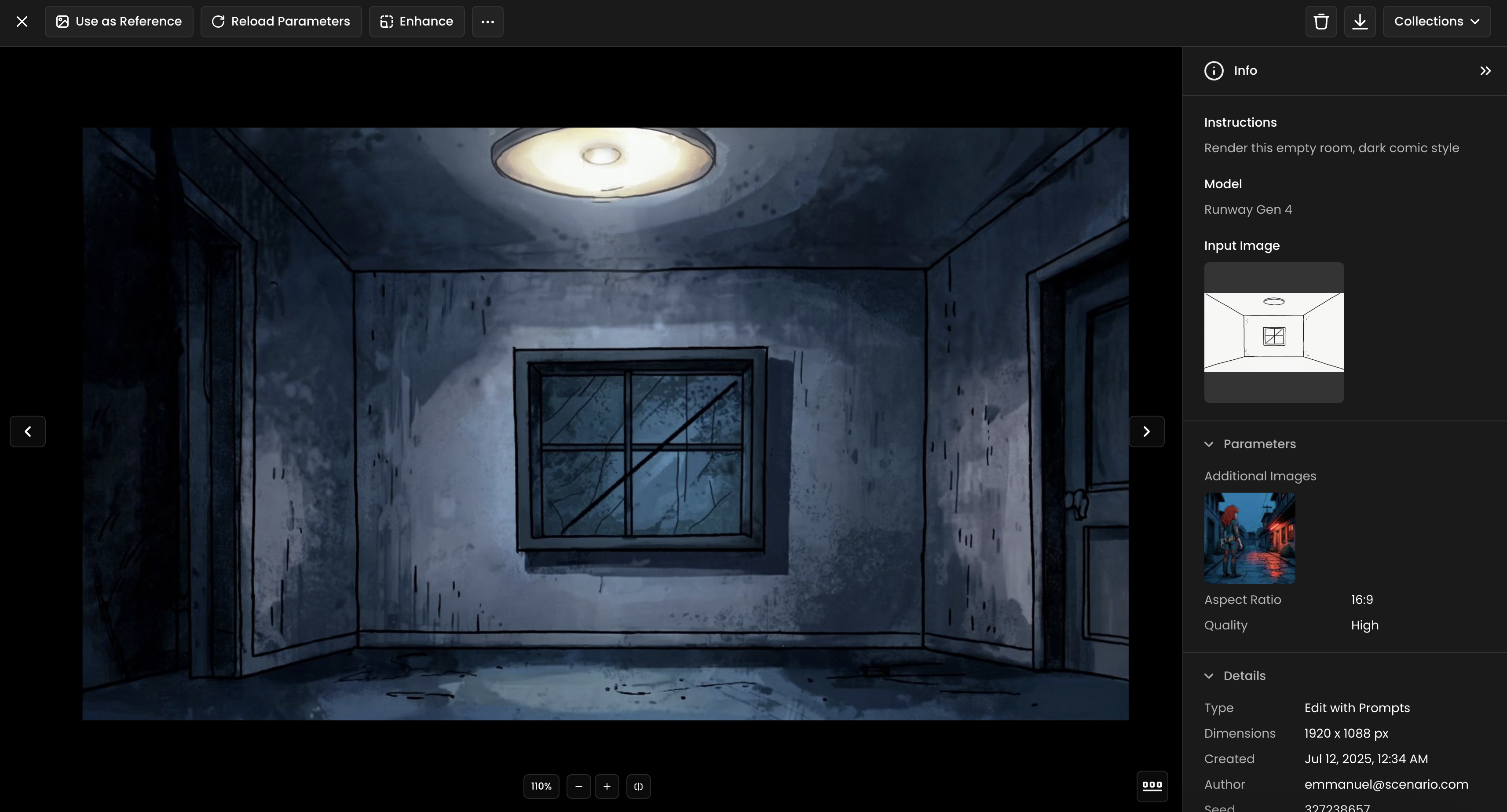

n. Render Sketches

Use a simple sketch as your starting point to generate rendered environments or subjects. In this example, a basic outline of an empty room was transformed into a fully rendered interior in a dark comic style. Try experimenting with different drawing styles and mood prompts to explore how Gen-4 interprets your ideas.

5. Conclusion

Runway Gen-4 References is a highly capable image generation model that combines visual coherence with creative flexibility. Its strengths—consistent character rendering, multi-subject integration, and support for stylistic variation—make it well-suited for a wide range of creative workflows. From visual development and storytelling to rapid iteration across genres, Gen-4 empowers creators to translate complex ideas into compelling visual narratives.

It’s important to note that Runway Gen-4 References has specific content guidelines and might limit your generations.
